As the AI race continues to heat up, some companies have quickly established themselves as leaders, each with differentiated value propositions. Among those is Anthropic, which was founded in 2021, with a special focus on safe AI.

Company Background

Holding the number five position in the Acceleration Economy list of AI Ecosystem Top 12 Pioneers, Anthropic offers AI systems and models, including its Claude 3 chatbot, a competitor to Google’s Gemini and OpenAI’s ChatGPT.

Claude 3 is trained with an approach Anthropic calls “constitutional AI,” which uses a written set of principles to steer the model toward ethical behavior. Anthropic is headed by CEO Dario Amodei, and the startup has raised more than $7 billion since 2023. Investors include Amazon, Google, and Salesforce.

Ironically, Anthropic was founded by former OpenAI employees, including Amodei, who left due to concerns over safety and ethical considerations related to AI development. This trend has continued, with more OpenAI employees and executives leaving recently while citing similar concerns.

Anthropic’s emphasis on AI safety, transparency, and ethics is echoed throughout the industry; it’s a key aspect of the recent AI executive order from the White House. Given that focus and passion from the Anthropic founders and organization, I’m going to analyze the organization and what makes it unique from my CISO perspective.

Claude 3: Anthropic’s Secure and Versatile AI Chatbot

First and foremost is Anthropic’s family of AI models, Claude 3. The industry has framed Claude 3 as a ChatGPT competitor; it offers not a single model but three different ones, each with various capabilities and performance metrics.

Anthropic positions the three models on a spectrum of intelligence and cost, with Haiku at one end — light and fast — and Opus at the high end — the most intelligent model in the trio, aimed at more complex analysis.

Having three versions offers unique value to customers and consumers because they can make their selection based on individual performance and cost requirements.
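To make that trade-off concrete, here is a minimal Python sketch of how a team might encode the selection. The capability and cost ranks are illustrative placeholders I have assumed, not Anthropic’s published pricing or benchmark figures, and `ModelTier` and `pick_tier` are hypothetical names. Sonnet is the middle model of the three.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelTier:
    name: str
    capability: int     # relative rank, 1 = lightest (assumed, illustrative)
    relative_cost: int  # relative rank, 1 = cheapest (assumed, illustrative)


# Ordered from lightest and cheapest to most capable and most expensive.
TIERS = [
    ModelTier("Haiku", capability=1, relative_cost=1),
    ModelTier("Sonnet", capability=2, relative_cost=2),
    ModelTier("Opus", capability=3, relative_cost=3),
]


def pick_tier(min_capability: int, max_cost: int) -> Optional[ModelTier]:
    """Return the cheapest tier that meets the capability floor
    without exceeding the cost ceiling, or None if no tier fits."""
    candidates = [
        t for t in TIERS
        if t.capability >= min_capability and t.relative_cost <= max_cost
    ]
    return min(candidates, key=lambda t: t.relative_cost, default=None)
```

Under these assumed ranks, a workload that needs mid-level capability with no tight cost ceiling would land on Sonnet, while a request for top-tier capability on a mid-tier budget returns no match, which is a useful signal that the requirements need revisiting.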

Claude has built-in, robust API security, and Anthropic has pursued industry compliance frameworks such as System and Organization Controls 2 (SOC 2) Type 2. The models are also available through leading cloud platforms such as Amazon Bedrock on AWS.

On the trustworthiness and reliability front, Anthropic claims Claude is 10 times more resistant to jailbreaks (techniques used to evade the safety guardrails put in place by LLM developers) and misuse than leading competitors. It also cites low hallucination rates and offers copyright indemnity protections for its paid services.

These features are key because they demonstrate that the Claude models were built with security in mind, addressing some of the key risks identified in sources such as the Open Web Application Security Project (OWASP) Top 10 for Large Language Model (LLM) Applications, as well as general industry heartburn with LLMs producing incorrect and invalid outputs due to hallucinations.

Prioritizing AI Safety Over Profit

Anthropic’s AI safety mission is distinctly different from that of some peers, who may make public statements about AI safety but don’t always act on those words amid competing priorities such as profitability, market share, and revenue.

Anthropic’s organizational structure was set up with its safety mission in mind: it is a public benefit corporation, and a special class of its shares is held by its Long-Term Benefit Trust.

It’s a differentiated position, particularly as some leading competitors have lost top talent and executives who publicly expressed concerns that those companies were prioritizing other goals, such as the pursuit of artificial general intelligence (AGI), over AI safety and security.

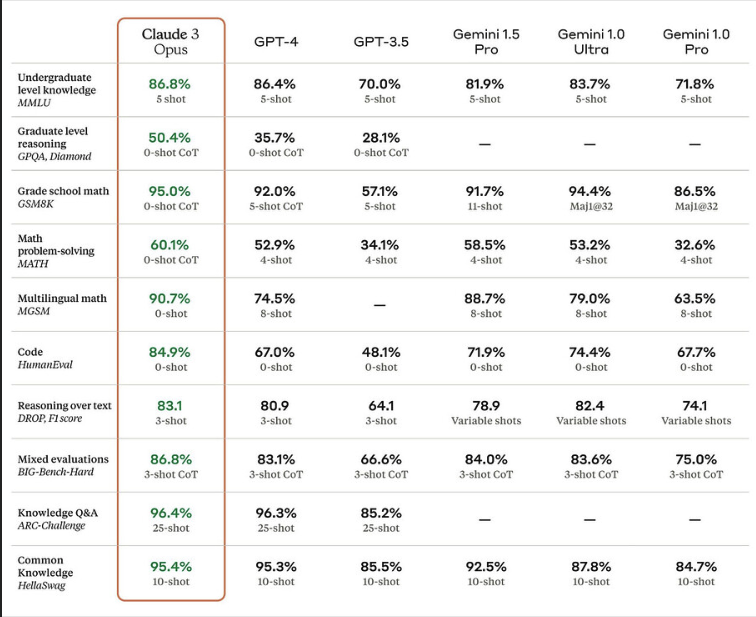

Opus Model Is Performance Leader

Another key differentiator is performance. The OpenAI Developer Forum has robust conversations comparing GPT-4 with other models, including Claude 3 Opus. The Opus model outperformed GPT-4 in several of the benchmarks discussed there, demonstrating superiority in multilingual math, coding, reasoning over text, and mixed evaluations.

This isn’t to say one model is better than the other for most or all use cases. There are, of course, key considerations when choosing between models. For example, code quality varies greatly between sources, a topic I have covered before. Low code quality means vulnerabilities or flaws that can be exploited.

Closing Thoughts

While the AI race is in its infancy, it’s clear that Anthropic has earned its stature among the Top 12 AI Pioneers and has some important differentiators for security leaders to consider. These include a fundamental grounding in and commitment to AI safety, trust, and transparency, in addition to robust funding and performance advantages over some competitors. These are all necessary to provide a level of assurance in the models you’re using, the organizations providing them, and the potential quality of outputs, such as code produced by the models.
