GenAI has been the biggest driver of tech industry innovation this century, with remarkable advances in the less than three years since ChatGPT burst onto the scene.

Despite the progress and innovation surrounding GenAI, security has been a looming concern: the Microsoft Data Security Index Report finds that more than 80% of business leaders point to potential leakage of sensitive data as their main concern regarding GenAI.

The good news: An increasing number of initiatives are playing out to enhance the security of GenAI, as well as AI agents that act with a higher level of autonomy than earlier generations of the technology. Other recent developments include:

And those are just three recent developments we’ve highlighted.

Now, Microsoft is outlining specific measures customers and partners can take to leverage its Purview data security and governance platform to protect data in GenAI applications and use cases.

This analysis will present several of those recommended measures, which fall into three categories: utilizing Purview’s AI Hub, AI Analytics, and Policies functionality.

Security Risks Introduced by GenAI

In an online event held last week, Microsoft set the context for use of Purview to protect data in GenAI use cases by detailing some of the security risks that come with the groundbreaking technology. They include:

- Overexposure of data by negligent or uninformed insiders who might create documents without appropriate access controls, thereby making it easy for other users to reference those documents in a Large Language Model (LLM) or Copilot

- Data leaks precipitated by disgruntled insiders who might use GenAI to find confidential information and then leak it

- Data leaks by negligent insiders, such as one who shares sensitive data in consumer GenAI apps

Such threats can be exacerbated in companies that use a wide range of security platforms. Not surprisingly, Microsoft positions Purview as a turnkey security platform that can consolidate multiple functions in one system. Still, the current state of play, with an average of 10+ security platforms used to secure the typical corporate data estate, can lead to complexity, security gaps, and management challenges.

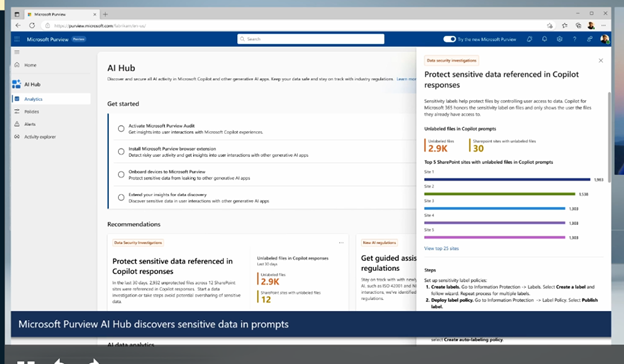

Purview AI Hub

As explained by Michael Lord, Security Global Black Belt at Microsoft, Purview AI Hub provides visibility into usage of GenAI and how data is being used within a company’s IT environment; this includes use of Copilot.

Information protection labeling can be applied so that access to content is limited to the people who should have it.

For example, in SharePoint, content can be labeled using Purview information protection functions: sensitivity labels are applied when content enters the SharePoint site, and all incoming information inherits those classifications. An admin can define who has access to individual documents. Enforcing a data loss prevention (DLP) policy would then block an individual who tried, for example, to cut and paste information into ChatGPT.
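The interplay of label inheritance and DLP enforcement described here can be sketched as a toy model. This is only an illustration under stated assumptions, not Purview's API: the label names, their ranking, the `Document` class, and both functions are hypothetical, and real policies are configured in the Purview portal rather than written as code like this.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical label ranking; real sensitivity labels are defined per organization.
LABEL_RANK = {"Public": 0, "General": 1, "Confidential": 2, "Highly Confidential": 3}


@dataclass
class Document:
    name: str
    label: Optional[str] = None  # None means no label was set on the file itself


def effective_label(doc: Document, site_default: str) -> str:
    """Return the document's own label, or inherit the site's default label."""
    return doc.label if doc.label is not None else site_default


def dlp_allows_paste(label: str, destination: str, blocked: set[str],
                     threshold: str = "Confidential") -> bool:
    """Block the paste when content is at or above the threshold label
    and the destination is on the blocked list (an assumed rule shape)."""
    is_sensitive = LABEL_RANK[label] >= LABEL_RANK[threshold]
    return not (is_sensitive and destination in blocked)


# Usage: an unlabeled file inherits the site's label, so the paste is blocked.
forecast = Document("q3-forecast.xlsx")
label = effective_label(forecast, site_default="Confidential")
print(label, dlp_allows_paste(label, "chatgpt.com", blocked={"chatgpt.com"}))
# prints: Confidential False
```

The point of the sketch is the ordering: the label is resolved first (including inheritance), and the DLP decision is made against that resolved label rather than against the raw file.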

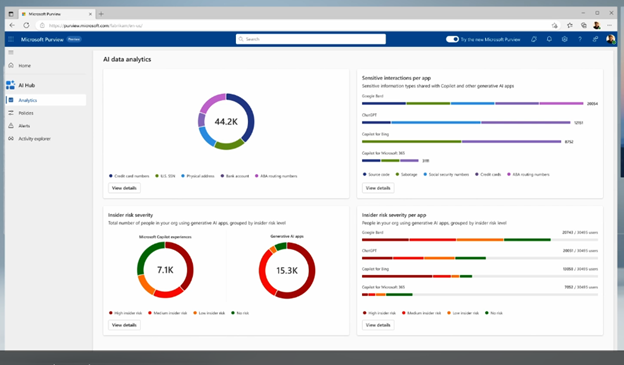

AI Data Analytics

AI Hub can report on activities related to data and applications in a GenAI context across the entire data estate, providing actionable analytic insights into user behaviors, interactions with sensitive data, and how both relate to GenAI.

Analytics insights help admins prioritize critical alerts, gain awareness of any high-priority data leaving or attempting to leave the corporate security perimeter, and act accordingly.

Data analytics also provides a view on whether, and to what extent, non-compliant and unethical use of AI is occurring within the environment. “This aggregation of all of these alerts really does provide you with trends and give you good insight into AI interactions within the environment… further, it gives you concepts of sensitive interactions per app, so you can look at the different things that are being used within the environment, the different generative AI applications,” Lord said during the virtual event.
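The "sensitive interactions per app" view Lord describes amounts to grouping flagged interactions by application. A minimal sketch follows, assuming a hypothetical list of alert records; the field names `app` and `sensitive` are illustrative and do not reflect a Purview export schema.

```python
from collections import Counter

# Hypothetical alert records; field names are illustrative only.
alerts = [
    {"app": "Copilot", "sensitive": True},
    {"app": "ChatGPT", "sensitive": True},
    {"app": "ChatGPT", "sensitive": False},
    {"app": "ChatGPT", "sensitive": True},
]


def sensitive_interactions_per_app(records):
    """Count interactions flagged as sensitive, grouped by GenAI app."""
    return Counter(r["app"] for r in records if r["sensitive"])


per_app = sensitive_interactions_per_app(alerts)
# List apps from most to fewest sensitive interactions.
for app, count in per_app.most_common():
    print(app, count)
```

Sorting by count is what turns a pile of individual alerts into a trend an admin can prioritize against.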

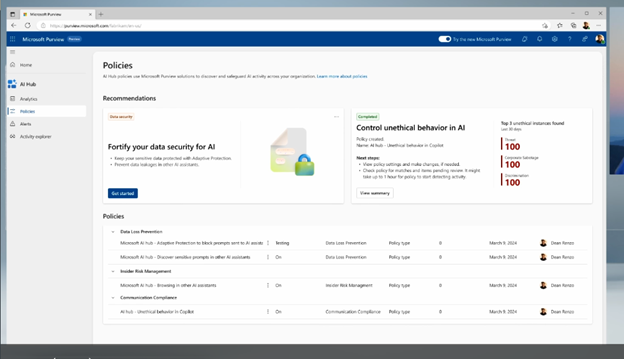

Policies

Admins can also configure policies that help prevent data loss that could occur through AI prompts and responses. Microsoft provides sample policies, which admins can modify, that draw on Purview solutions such as DLP and communication compliance. The goal: bring all of an enterprise’s AI activity into a single, unified data protection strategy.

This includes applying policies to scan, classify, and label data consistently across the data estate, which can include Microsoft 365, Azure SQL, Azure Data Lake, Amazon S3 buckets, and other structured data sources.

Closing Thoughts

GenAI and the other AI apps and tools it has spawned, including AI agents, create new and potentially unknown security risks that go beyond the obvious or predictable. In an agentic AI context especially, agents that act autonomously could have unintended consequences, including some that compromise security.

No one vendor or platform will be able to fully lock down all AI activities, applications, or tools. But comprehensive platforms such as Purview, together with Microsoft’s targeted guidance on how to use it fully, are positive steps toward raising security awareness. They also help customers get the most from tools they already use, so they can proceed as securely as possible with AI use cases while keeping a strong focus on innovating with AI technology.
