Emphasizing the critical role of Purview as an enterprise platform for protecting corporate data, Microsoft is adding AI functionality that helps customers investigate and mitigate data security incidents by accelerating the investigation process after a breach. Last week, it provided new insights on Purview Data Security Investigations, now in public preview.

Purview Data Security Investigations is one prominent addition to the Microsoft security lineup that was demoed at an online security event last week. As a range of company executives explained, the company is enlisting AI to address an expanding attack surface and a widening range of threats, and to combat attackers that are deploying AI for nefarious purposes. The enhancements come on the heels of a set of AI security agents delivered by Microsoft and partners.

Taken together, these moves are meant to bolster the company’s security product portfolio with AI functionality while strengthening customer confidence following major security challenges and missteps in the recent past.

In this report, I’ll detail Purview Data Security Investigations, as well as AI-focused enhancements to Entra identity management and Defender for AI services.

Purview Power

Purview Data Security Investigations leverages AI to analyze data at scale in order to launch and execute data security investigations quickly.

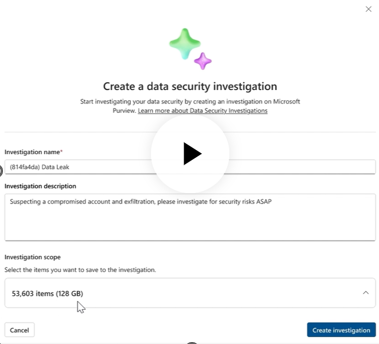

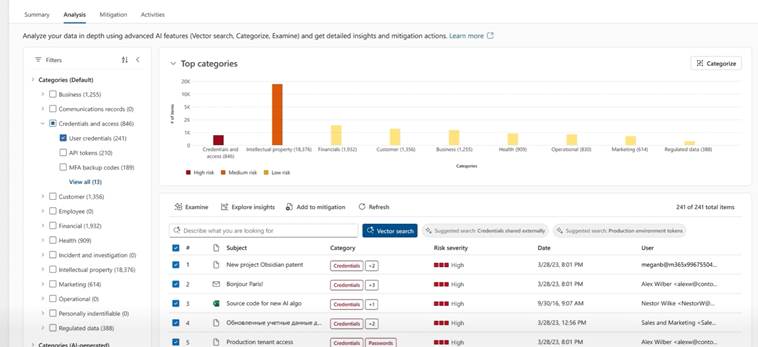

An investigation can be pre-scoped with impacted data, saving security admins much of the time required to process data and pinpoint where major risks exist so they can begin to understand a security incident, explained Rudra Mitra, corporate vice president, Microsoft Purview. In a demo, the company showed more than 50,000 events being analyzed, along with the ability to isolate individual categories of events, using the example of those related to credentials and access.

“With that one click, all the 53,603 items associated with the risky users’ activity are brought right into the investigation,” Mitra explained.

An automatically generated report will show admins a summary, the remaining risks, mitigation steps, and the thought process or methodology behind the assessment that’s been created.

Purview Data Security Investigations provides the ability to visualize correlations between impacted users and their activities so administrators can uncover additional users or new content requiring investigation. The Investigations functionality can also be used to proactively search Microsoft 365 or other sources in the corporate data estate to find incident data.

By applying AI to security investigations for its installed base of Purview customers, Microsoft is expanding the toolkit for offloading critical manual processes that can be resource-intensive.

Shadow AI in the Crosshairs

Microsoft execs shared a new data point on the troubling trend toward Shadow AI and its risks: 78% of users are using their own AI tools, such as consumer GenAI apps accessed through a web browser, rather than corporate-sanctioned or governed ones.

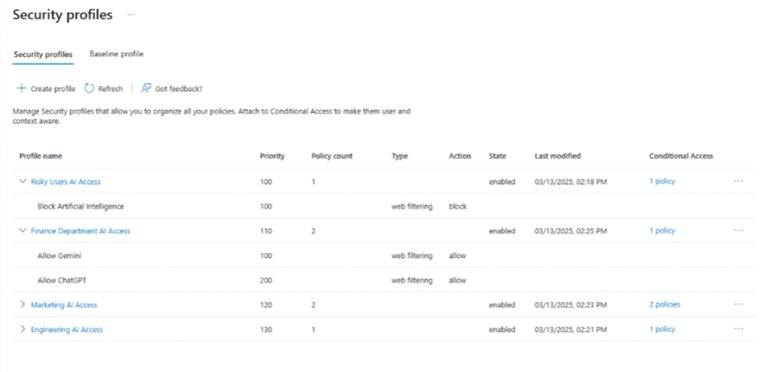

To address this problem, the company is delivering new capabilities in Entra access management and Purview to prevent high-risk access scenarios and data leaks. Microsoft Entra now provides a web filter that lets admins create AI app access policies based on a user’s identity type and role. “With this, you can have one set of policies for your finance department and another set of policies for your researchers,” said Herain Oberoi, general manager, data and AI security. Those Entra access controls are now generally available.

For Purview, the company is offering new data security controls, now in public preview, on Microsoft Edge for Business that prevent sensitive information from being uploaded or pasted into AI apps through the browser. Sensitive info that’s typed or submitted is detected and blocked in real time. A new AI web filter ensures that “risky” users, for example, gain access only to the AI applications they need.

In a demo of this functionality, the company showed how an admin can create new policies, which are configured by default to detect 25 of the most common types of sensitive data. There are also custom classifiers that can flag, for example, any merger- or acquisition-related keywords to curb Shadow IT and prevent leaks of highly sensitive data into unsanctioned AI apps.

Protecting AI Services

In its online event, Microsoft emphasized the importance of protecting not only data within AI apps, but also AI services hosted in cloud infrastructure. New Microsoft Defender functions are intended to lock down these services. The new functions empower security teams to identify, prioritize, and mitigate risks, with near real-time cloud detection and response to safeguard AI applications, explained Rob Lefferts, corporate vice president, threat protection.

To do so, Microsoft is expanding AI Security Posture Management functionality in Microsoft Defender beyond the current support of Azure OpenAI, Azure Machine Learning, and AWS Bedrock. With the latest enhancements, Defender will support Google Vertex AI models starting in May. Support for all models in the Azure AI Foundry model catalog, including Meta Llama, Mistral, DeepSeek, and custom models, is generally available. This expansion provides a unified approach to managing AI security risks across multi-cloud and multi-model environments.

Security Copilot Proof Point

Finally, Microsoft customer St. Luke’s University Health Network shared the core benefits it’s realizing through use of Microsoft Security Copilot. Security Copilot eliminates the need to gather data from multiple dashboards, helps pinpoint the most important data, and brings team members up to speed quickly on incidents.

“Security Copilot integrates with Microsoft Defender and Microsoft Sentinel, and it sends data back and forth and gives us better context with the alerts that we are given,” said Krista Arndt, associate chief information security officer.

Added David Finkelstein, chief information security officer at St. Luke’s, “Security Copilot is giving us the ability to see that information and then build road maps and build strategies behind how we fill those gaps to make things easier and more efficient for us… It’s almost like an extra person.”
