As the cybersecurity industry continues to push artificial intelligence (AI), practitioners race to keep up with businesses’ explosive adoption rates. Security teams are trying to strike a delicate balance: enabling the business, implementing sound governance, and empowering businesses to make risk-informed decisions about technology use and integration.

Luckily, industry leaders such as the Cloud Security Alliance (CSA), the Open Worldwide Application Security Project (OWASP), and now Databricks are publishing resources on AI security. In this analysis, I take a high-level look at Databricks’ recently released whitepaper, the Databricks AI Security Framework (DASF), and explain its key takeaways for cybersecurity professionals.


Model Types

The paper opens by defining the three broad types of models used in AI: predictive machine learning (ML) models, state-of-the-art open models, and external models. Predictive ML models are typically built with frameworks and libraries such as PyTorch and Hugging Face. State-of-the-art open models, such as Llama-2-70b-chat, are openly available foundation models, including large language models (LLMs), that organizations can use as-is or fine-tune. Lastly, external models are third-party services that provide foundation models, such as OpenAI’s GPT models and Anthropic’s Claude models.

AI System Components and Their Risks

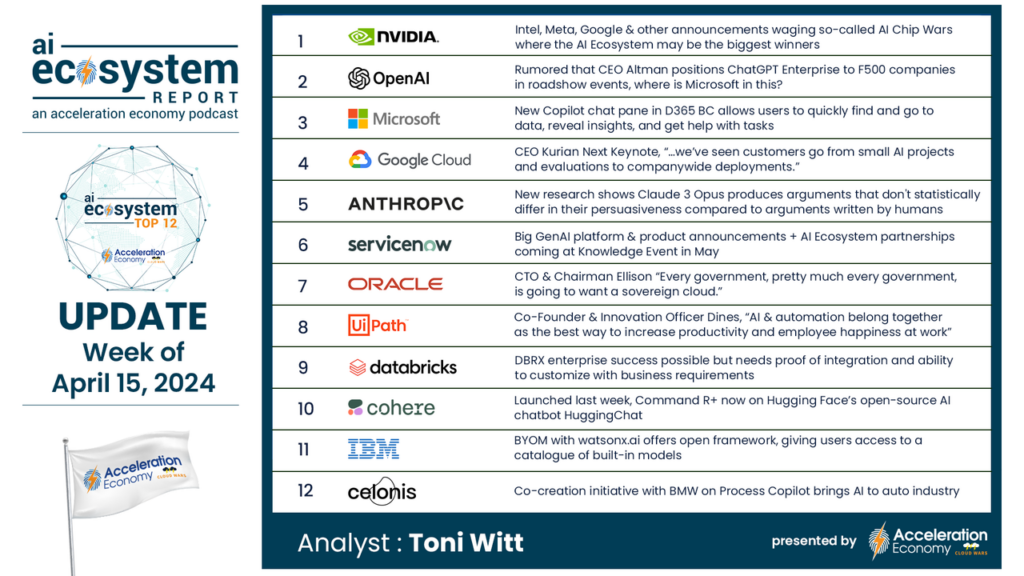

The paper walks through the common components of an AI system and the risks associated with each. These components span raw data, data preparation and cataloging, model development, evaluation, and management, and the serving of inference requests and responses.

For each component, the paper catalogs the associated risks: in total, it lays out 55 distinct technical security risks across 12 components commonly deployed by Databricks customers. Each component is mapped to its potential security risks and to a system stage, and these stages align with the diagram above.

Let’s briefly walk through the components, their risks, and the stage of the software development lifecycle (SDLC) each belongs to. Because the DASF organizes components by system stage, I’ll use the stages to structure the discussion, covering each stage’s components and risks in turn.

Data Operations

The first system stage identified is Data Operations. This includes the following components:

- Raw Data

- Data Preparation

- Datasets

- Catalog and Governance

This system stage has the highest number of potential security risks, including insufficient access controls, poor data quality, ineffective encryption, lack of logging, and data poisoning, among many others. The stage begins with raw data, which the DASF emphasizes is the foundation on which all AI functionality is built. If that data is compromised in any way, the damage propagates downstream to every other part of the AI system and its outputs. Controls are also needed to handle data preparation securely, safeguard datasets, and catalog and govern the broader data operations.
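One common way to detect tampering with approved training data is to record a SHA-256 digest of every file when a dataset is approved and re-verify it before each training run. This is an illustrative control chosen for this sketch, not one the DASF prescribes here, and the function names (`build_manifest`, `verify_manifest`) are hypothetical. Note that hashing only detects changes made after the manifest is recorded; it cannot catch data that was already poisoned at approval time.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> Dict[str, str]:
    """Map every file under data_dir (as a relative POSIX path) to its SHA-256 digest."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(data_dir: Path, manifest: Dict[str, str]) -> List[str]:
    """Compare the current files against a recorded manifest and list every discrepancy."""
    current = build_manifest(data_dir)
    problems = []
    for name, expected in sorted(manifest.items()):
        if name not in current:
            problems.append(f"missing: {name}")
        elif current[name] != expected:
            problems.append(f"modified: {name}")
    for name in sorted(current.keys() - manifest.keys()):
        problems.append(f"unexpected: {name}")
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "train.csv").write_text("feature,label\n1,0\n")
        manifest = build_manifest(root)  # recorded when the dataset is approved
        (root / "train.csv").write_text("feature,label\n1,1\n")  # simulate a flipped label
        print(verify_manifest(root, manifest))  # ['modified: train.csv']
```

In practice the manifest itself would need to be stored somewhere with stricter access controls than the data, or an attacker could simply rewrite both.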

Model Operations

Model operations is the next system stage identified, which includes:

- ML Algorithm

- Evaluation

- Model Build

- Model Management

The DASF identifies 15 risks that apply to model operations, including model drift, malicious libraries, ML supply chain vulnerabilities, and model theft. Any malicious activity that compromises a model can also corrupt its outputs. Supply chain security is another concern: malicious libraries, for example, can compromise systems, data, and models alike. Given the widespread use of third-party libraries and open source software in ML development, this risk is of particular concern.
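To make the supply chain concern concrete, here is a minimal sketch that compares the packages installed in a Python training or serving environment against an approved allowlist of pinned versions. It uses only the standard library’s `importlib.metadata`; the allowlist format and the function names are assumptions for illustration, not part of the DASF.

```python
import re
from importlib import metadata
from typing import Dict, List


def normalize(name: str) -> str:
    """Normalize a distribution name per PEP 503 (e.g. 'Scikit_Learn' -> 'scikit-learn')."""
    return re.sub(r"[-_.]+", "-", name).lower()


def installed_packages() -> Dict[str, str]:
    """Return {normalized name: version} for every distribution visible to this interpreter."""
    packages = {}
    for dist in metadata.distributions():
        try:
            name = dist.metadata["Name"]
        except KeyError:  # newer importlib.metadata raises on missing keys
            name = None
        if name:  # skip distributions with missing or broken metadata
            packages[normalize(name)] = dist.version
    return packages


def check_against_allowlist(installed: Dict[str, str], allowlist: Dict[str, str]) -> List[str]:
    """Flag packages that are not approved or whose version differs from the pinned one."""
    approved = {normalize(k): v for k, v in allowlist.items()}
    findings = []
    for name, version in sorted(installed.items()):
        if name not in approved:
            findings.append(f"unapproved package: {name}=={version}")
        elif approved[name] != version:
            findings.append(f"version mismatch: {name}=={version} (approved {approved[name]})")
    return findings


if __name__ == "__main__":
    # Hypothetical allowlist; a real one would be generated and reviewed centrally.
    for finding in check_against_allowlist(installed_packages(), {"numpy": "1.26.4"}):
        print(finding)
```

A check like this is detective rather than preventive; enforcing pins at install time (for example, with pip’s hash-checking mode) stops an unexpected package from landing in the environment in the first place.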

The DASF also calls out the significant investment required to train ML systems, especially LLMs. Model theft is therefore a significant risk: it can erode competitive advantage and squander that investment. This risk underscores the need to properly secure ML algorithms, the model building and training process, and the management of the models themselves.
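Model theft is not limited to stealing weights; an attacker can also try to replicate a model’s behavior by sending it a very large number of queries. One widely used control against that query-based variant is per-client rate limiting on the serving endpoint. The token-bucket sketch below is an illustrative assumption, not a mechanism described in the DASF; the class names and the injectable `clock` parameter exist only to keep the example self-contained and testable.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Permits bursts of up to `capacity` requests, refilling at `refill_rate` tokens per second."""

    def __init__(self, capacity: float, refill_rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add tokens for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.refill_rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerClientLimiter:
    """Keeps a separate bucket per client ID (e.g. an API key) so no caller gets unbounded queries."""

    def __init__(self, capacity: float, refill_rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str) -> bool:
        # Lazily create a full bucket the first time a client is seen.
        if client_id not in self.buckets:
            self.buckets[client_id] = TokenBucket(self.capacity, self.refill_rate, self.clock)
        return self.buckets[client_id].allow()
```

With `capacity=100` and `refill_rate=1.0`, for example, each key could burst 100 requests and then sustain roughly one per second. Rate limiting only slows extraction; protecting the weights themselves still depends on access controls over the stored model artifacts.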

Model Deployment and Serving

The next system stage, model deployment and serving, includes:

- Model Serving Inference Requests

- Model Serving Inference Responses

Risks called out at this stage include prompt injection, model breakouts, LLM hallucinations, and accidental exposure of unauthorized data to models. Prompt injection is an attack technique that has gained a lot of visibility: users inject text aimed at altering an LLM’s behavior, which can be used to bypass safeguards and cause damage. Hallucinations, in which LLMs generate incorrect or fabricated outputs, are another risk that has made headlines, alongside cases of models leaking sensitive data that was never meant to be shared. Lastly, the risk of exposing unauthorized data to models is drawing attention. As LLMs and generative AI (GenAI) become a key part of modern organizational infrastructure and software environments, accidental exposure of proprietary and confidential data to models can pose significant risks to organizations.
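For the last risk, accidental exposure of sensitive data to models, one simple mitigation is to redact recognizable sensitive values before a prompt leaves the organization. The regular expressions below are illustrative assumptions that cover only a few formats (email addresses, US Social Security numbers, and AWS access key IDs), and the placeholder labels are hypothetical; a production system would rely on a vetted detection service. This sketch does nothing to stop prompt injection.

```python
import re
from typing import Dict, Tuple

# Illustrative patterns only: they cover a handful of formats and will miss many others.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "AWS_ACCESS_KEY_ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def redact(prompt: str) -> Tuple[str, Dict[str, int]]:
    """Replace each match with a typed placeholder and count how many values were removed."""
    counts: Dict[str, int] = {}
    for label, pattern in REDACTION_PATTERNS.items():
        prompt, n = pattern.subn(f"[REDACTED_{label}]", prompt)
        if n:
            counts[label] = n
    return prompt, counts


if __name__ == "__main__":
    safe_prompt, found = redact("Summarize the ticket from jane.doe@example.com (SSN 123-45-6789).")
    print(safe_prompt)  # Summarize the ticket from [REDACTED_EMAIL] (SSN [REDACTED_US_SSN]).
    print(found)        # {'EMAIL': 1, 'US_SSN': 1}
```

Returning the counts alongside the redacted text makes it easy to log how often sensitive data is being sent toward a model, which is useful evidence for the governance side of this risk.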

Operations and Platform

Rounding out the system stages identified in the DASF is Operations and Platform. The components identified here include:

- ML Operations

- ML Platform

Various risks affect this stage, such as a lack of repeatable standards, poor vulnerability management, a lack of penetration testing and incident response, an inadequate SDLC, and a lack of compliance. Many of these risks are common to digital enterprise environments generally and apply to broader application security and governance as well. The specific mention of a lack of compliance also highlights the increasingly tense intersection between the rapid growth of AI and mounting pushes for regulatory compliance.
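Several of these risks, such as missing repeatable standards and weak vulnerability management, are often addressed by encoding a security baseline as code and checking every deployment against it. The sketch below assumes a hypothetical configuration dictionary for a model-serving endpoint; the keys, control IDs, and 30-day scan threshold are invented for illustration and do not come from the DASF or any specific platform.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Config = Dict[str, Any]


@dataclass(frozen=True)
class Control:
    """One baseline requirement: an ID, a human-readable description, and a predicate."""
    control_id: str
    description: str
    check: Callable[[Config], bool]


# Hypothetical baseline; the config keys and thresholds are illustrative only.
BASELINE: List[Control] = [
    Control("AUTH-01", "endpoint requires authentication",
            lambda c: c.get("auth_required") is True),
    Control("LOG-01", "request and response logging enabled",
            lambda c: c.get("logging_enabled") is True),
    Control("ENC-01", "encryption at rest enabled",
            lambda c: c.get("encryption_at_rest") is True),
    Control("VULN-01", "container image scanned in the last 30 days",
            lambda c: c.get("days_since_last_scan", float("inf")) <= 30),
]


def evaluate(name: str, config: Config, baseline: List[Control] = BASELINE) -> List[str]:
    """Return one finding per failed control; an empty list means the config meets the baseline."""
    return [
        f"{name}: {ctrl.control_id} failed ({ctrl.description})"
        for ctrl in baseline
        if not ctrl.check(config)
    ]


if __name__ == "__main__":
    endpoint = {"auth_required": True, "logging_enabled": False,
                "encryption_at_rest": True, "days_since_last_scan": 45}
    for finding in evaluate("fraud-model-endpoint", endpoint):
        print(finding)
```

Treating a missing key as a failure is a deliberate choice here: a configuration that never states whether logging is on should not pass a compliance check by default.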

Moving Forward

AI adoption continues to grow rapidly, and the DASF represents an excellent resource that diverse stakeholders across organizations can leverage to ensure a secure and compliant approach to AI adoption. Databricks is a pioneer in this space, leading with its AI security risk workshops, thought leadership, and exceptional platform to help organizations securely use AI.