As I discussed in a recent AI/Hyperautomation Minute, I consider customer trust to be an up-and-coming factor for success when it comes to providing AI-powered products and services. While performance has dominated the conversation around AI in the past, with demonstrations of algorithms beating human chess players and talk of ever-increasing parameter counts, I think the tide is turning in favor of trust as AI moves into consumer markets and gains mainstream awareness.

Buying decisions will be made not just on the raw performance of an AI system but increasingly on other factors, in particular trust. This makes sense, given that increasingly vital processes, from mortgage lending to healthcare checkups to hiring, are being automated with AI. Going forward, there has to be zero tolerance for bias, errors, or leaks in these critical systems.

However, most organizations still aren’t assigning top priority to trust-building activities like bias mitigation, ethical development, safety, and security. While they set their sights on maximizing performance, your organization has an opportunity to differentiate itself by focusing on these factors. This analysis dives into one way of doing that: using AI frameworks.

The Role of Frameworks in AI Development

It’s clear that building an AI system or embedding the technology into existing operations isn’t just a box that your PR or innovation team is urging you to check. AI is here to stay, and organizations that don’t use automation technologies will struggle to maintain a competitive edge in the Acceleration Economy.

As such, it’s necessary to have a set of guiding principles that help you along the way. A good framework leans on the unique values and aspirations of your company while also providing a clear lens through which to make day-to-day decisions. It keeps everyone on the same page and ensures AI integrates well into the entire organization, not just certain departments. It also helps you maintain compliance and be transparent with the public, which builds trust!

Now I’d like to share three examples of organizations using AI frameworks and how they’re helping those firms.

Pfizer Implements ‘Responsible AI Principles’

There are plenty of opportunities for automation in the healthcare industry. Since the start of the pandemic, there has been an increased need to reduce the workload of healthcare workers. Recognizing this, Pfizer has become an industry leader in applying AI.

Part of Pfizer’s effort was creating its own framework for responsible AI development — the Responsible AI Principles — based on fairness, empowering humans, privacy, transparency, and ensuring accountability of its AI systems.

These principles are written in inspiring language that can help other companies clarify their own responsible AI objectives. That is partly because Pfizer operates in an industry where real people, namely patients, are the top priority, and where building trust is paramount to delivering quality healthcare services.

To stay true to these principles, Pfizer worked with Dataiku, a company on the Acceleration Economy Top 10 Shortlist of AI/Hyperautomation enablers. Together, the partners built and deployed an AI Ethics Toolkit that supports bias detection, bias mitigation, and model transparency.

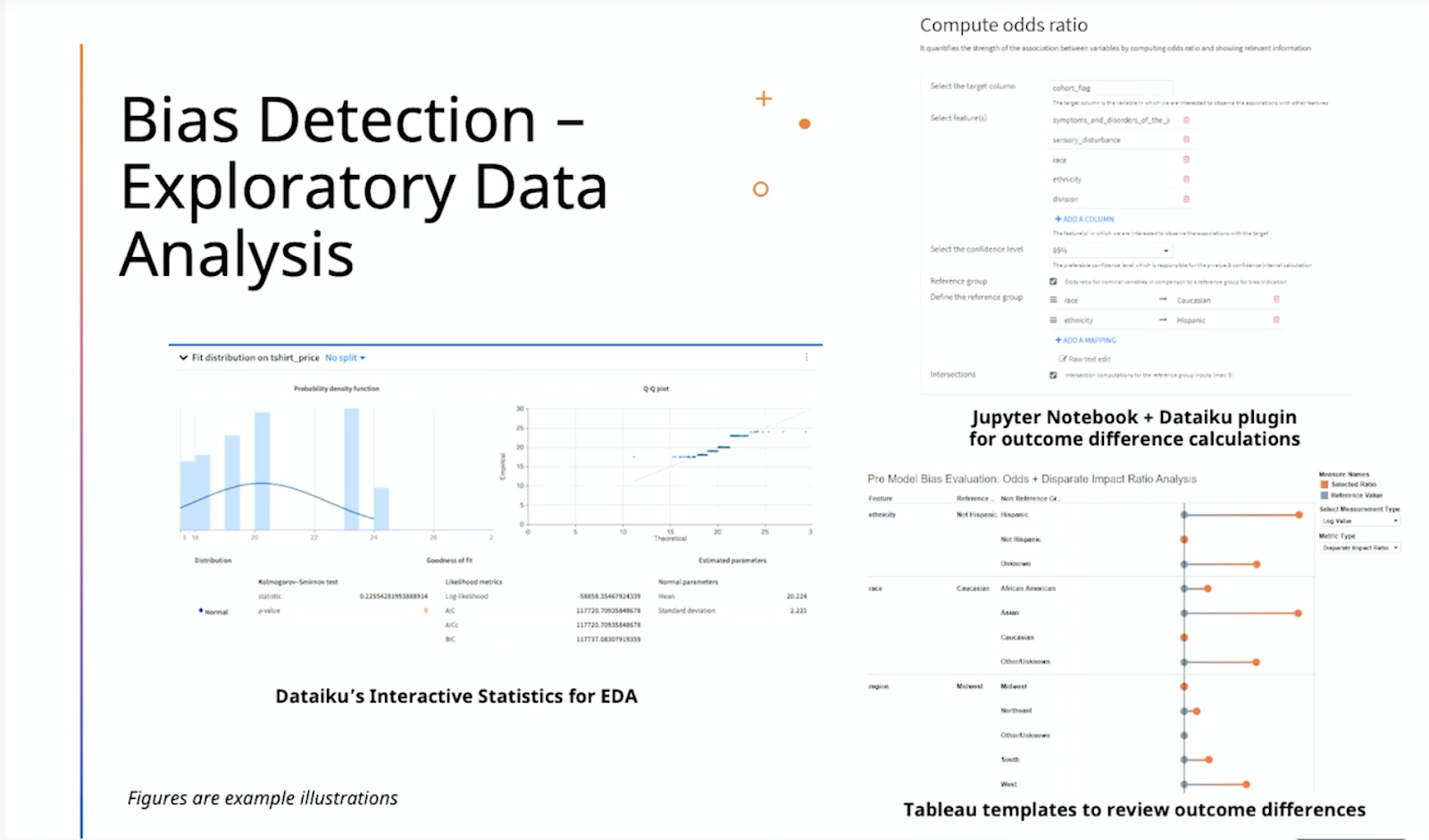

Pfizer’s AI team leveraged Dataiku’s Interactive Statistics for EDA (Exploratory Data Analysis) tool to explore data sets, detect biases, and improve the data before even training a model.
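To make "detecting bias before training" more concrete, here is a minimal sketch of one common pre-training check: comparing the rate of positive outcomes across demographic groups in a dataset. This is not Dataiku's tool or Pfizer's method; the field names (`group`, `approved`), the toy data, and the 0.8 threshold (a "four-fifths" rule of thumb from US employment guidance) are illustrative assumptions only.

```python
from collections import defaultdict


def positive_rates(records, group_key, label_key):
    """Share of records with a positive label (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        positives[group] += row[label_key]
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group has any positives, so rates are equal
    return min(rates.values()) / highest


# Hypothetical toy data: "group" and "approved" are illustrative field names.
data = [
    {"group": "A", "approved": 1}, {"group": "A", "approved": 1},
    {"group": "A", "approved": 0}, {"group": "A", "approved": 1},
    {"group": "B", "approved": 1}, {"group": "B", "approved": 0},
    {"group": "B", "approved": 0}, {"group": "B", "approved": 0},
]

rates = positive_rates(data, "group", "approved")
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
if ratio < 0.8:  # assumed rule-of-thumb threshold, not a legal test
    print("Possible bias: review this data before training a model")
```

A low ratio does not prove the data is unfair, but it flags where a team would want to look more closely, which is the point of doing this during exploration rather than after deployment.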

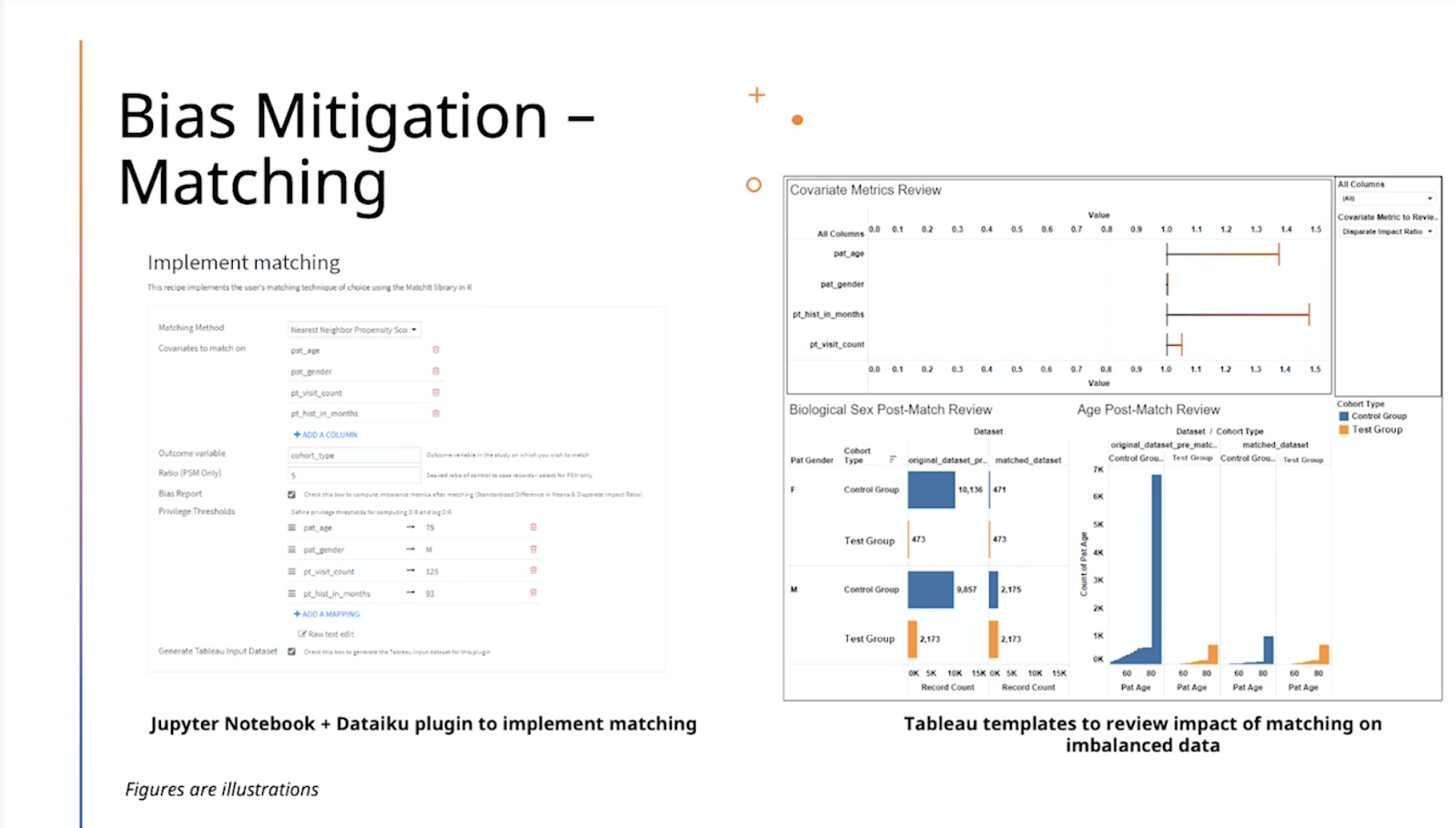

Using Dataiku’s visual dashboards and plug-ins, Pfizer was able to mitigate bias after it was detected. The company relied on a technique drawn from traditional social science known as ‘matching,’ which minimizes differences between the subsegments of a population that do and do not receive a treatment, so that comparisons between them mimic a randomized controlled experiment.
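For readers unfamiliar with matching, the sketch below shows the basic idea in its simplest form: pair each treated unit with the most similar untreated unit, then compare outcomes only within those pairs. It assumes a single numeric covariate (a hypothetical `age` field) and greedy 1:1 nearest-neighbor matching without replacement; real analyses usually match on many covariates or on propensity scores, and this is not a description of Pfizer's or Dataiku's implementation.

```python
def greedy_match(treated, control, key):
    """Pair each treated unit with the closest unused control unit on `key`
    (1:1 nearest-neighbor matching without replacement)."""
    available = list(control)
    pairs = []
    for t in sorted(treated, key=lambda r: r[key]):
        if not available:
            break  # ran out of control units to match against
        best = min(available, key=lambda c: abs(c[key] - t[key]))
        available.remove(best)
        pairs.append((t, best))
    return pairs


def matched_effect(pairs, outcome):
    """Average outcome difference (treated minus control) across matched pairs."""
    if not pairs:
        raise ValueError("no matched pairs")
    return sum(t[outcome] - c[outcome] for t, c in pairs) / len(pairs)


# Hypothetical toy data: "age" is the covariate, "outcome" the measured result.
treated = [{"age": 30, "outcome": 0.8}, {"age": 50, "outcome": 0.6}]
control = [
    {"age": 25, "outcome": 0.5}, {"age": 31, "outcome": 0.7},
    {"age": 49, "outcome": 0.4}, {"age": 70, "outcome": 0.2},
]

pairs = greedy_match(treated, control, "age")
naive = (sum(r["outcome"] for r in treated) / len(treated)
         - sum(r["outcome"] for r in control) / len(control))
print("naive difference:", round(naive, 2))
print("matched difference:", round(matched_effect(pairs, "outcome"), 2))
```

The naive comparison mixes in the effect of age differences between the two groups; the matched comparison only contrasts people of similar age, which is how matching approximates what a randomized experiment would show.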

To ensure model transparency and explainability, the Pfizer team developed a list of questions to ask before and after developing a model. For more on this approach, check out Dataiku’s overview of the partnership.

Ultimately, Pfizer was able to answer questions posed about its models based on data made visible through Dataiku’s dashboards and plug-ins. The guidance provided by Dataiku’s team helped Pfizer’s AI team deploy AI systems in line with its responsible AI principles.


Partnering with one of the Acceleration Economy AI/Hyperautomation Top 10 companies is a great way to stay true to whichever framework you adopt, regardless of your industry. As a startup founder, I’ve learned that you will not reach lofty goals alone. Outsourcing work and bringing in capable partners are critical, especially if your company doesn’t have experience operating in the AI world. There could be many variables you don’t see, leading to missed opportunities to recognize and work toward your company’s mission and values. The cost of these misses isn’t just monetary; faulty AI systems can harm people’s lives.

Rolls-Royce’s Aletheia Framework

Motivated by its own need to increase trust in the outcomes of its AI algorithms, Rolls-Royce released the Aletheia Framework to the public so that other organizations can use it to their benefit and, hopefully, help ensure a better future for everyone. Then-CEO Warren East described the intention as “[moving] the AI ethics conversation forwards from discussing concepts and guidelines to accelerating the process of applying it ethically.”

The framework contains a list of 32 actionable steps, all based on three core pillars:

- Positive social impact on all stakeholders

- Accuracy and trust through mitigating bias and increasing validity

- Proper governance through protocols and checks

These pillars are useful for guiding your framework because they can help you avoid the common pitfall of focusing only on certain trust-building processes like bias mitigation, which has received extensive media coverage in recent years. Instead, they prompt you to consider the broader impact on all stakeholders. Plus, the final pillar, proper governance, is necessary for putting ideals into practice. A framework is useless without this component.

Deloitte’s ‘Trustworthy AI Framework’

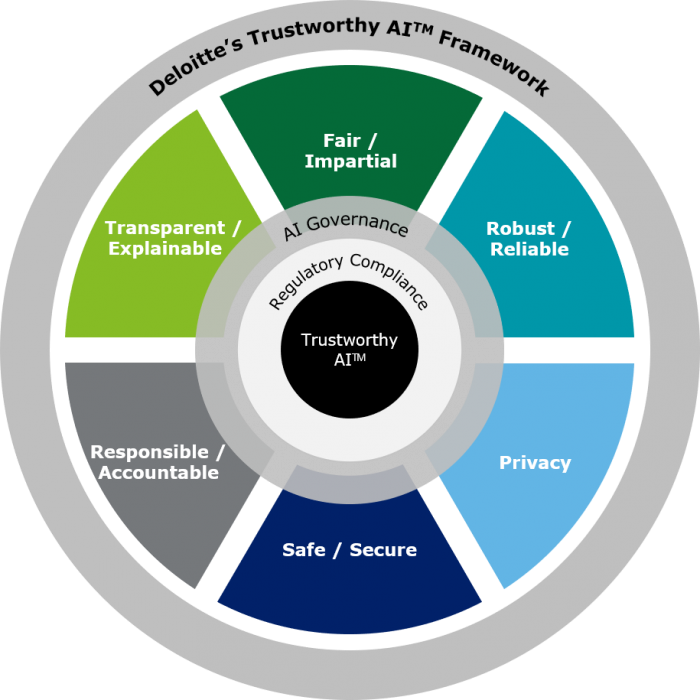

Deloitte developed a framework that outlines the core values that lead to trust in an AI system. Regulatory compliance is at its heart, but buyers will also care about elements such as fairness, reliability and robustness, privacy, safety and security, accountability, and transparency.

This framework enables Deloitte to maintain compliance and be transparent with customers. Other companies aiming to build trust in their AI systems can apply its elements to meet customer expectations and regulatory compliance requirements.

Government’s Role

I believe governments will need to play a role in the regulation of AI in the coming years. Left alone, companies might not hold themselves fully accountable. By nature, they could prioritize profits and go-to-market speed over safety and long-term societal impact. Regulators must defend the latter, yet it is becoming increasingly difficult to do so with the accelerating pace of AI development.

When AI systems contain bias against certain demographics, they produce harmful outputs. Unchecked development also runs the risk of AI systems eluding human control, a scenario sometimes called “runaway AI.”

For instance, before releasing GPT-4, OpenAI had the model evaluated for whether, when given the ability to run code, compute resources, and a small amount of money, it could act on its own outputs to acquire more resources and replicate itself. This is the breeding ground for superintelligence, and there are no regulatory boundaries for protection. There is still significant ambiguity in many cases involving ethics and AI.

If you disagree with the view that government must be involved in AI, consider this example: if a financial advisory firm with fiduciary responsibility to clients builds an AI tool advising those clients on certain investments, and the underlying security declines in value and the client sues, who is held responsible? The AI developers? The client? The CEO of the financial firm? This is just one of many possible scenarios that will need to be addressed in a regulatory context.

Governments can draw inspiration from the EU and its Ethics Guidelines for Trustworthy AI, which, among many other important principles, continue the emphasis on protecting individuals that the EU established with the GDPR.

Conclusion

Trust in your AI systems is not just a nice-to-have; it’s a competitive advantage. To build trust, organizations must detect bias in their models early on, mitigate it where possible, and take factors like security, reliability, and ethics seriously. AI frameworks can help keep your organization aligned on these values throughout the development pipeline and put you proactively on the path to building and maintaining trust in your AI models.
