Workplace use of apps such as ChatGPT is growing. Powered by large language models (LLMs) like GPT-3.5 and GPT-4, the generative AI chatbot is being used by employees in countless ways to create code snippets, articles, documentation, social posts, content summaries, and more. However, as we've witnessed, ChatGPT presents security dilemmas and ethical concerns that make business leaders uneasy.

Generative AI is still relatively untested and full of potential security concerns, such as leaking sensitive information or company secrets. ChatGPT has also been on the hot seat for revealing user prompts and “hallucinating” false information. Due to these incidents, a handful of corporations have outright banned its use or sought to govern it with rigid policies.

Below, we’ll consider the security implications of using ChatGPT and cover the emerging security and privacy concerns around LLMs and LLM-based apps. We’ll outline why some organizations are banning these tools while others are going all in on them, and we’ll consider the best course of action to balance this newfound “intelligence” with the security oversight it deserves.

Emerging ChatGPT Security Concerns

The first emerging concern around ChatGPT is the leakage of sensitive information. A report from Cyberhaven found that 6.5% of employees have pasted company data into ChatGPT. Sensitive data makes up 11% of what employees paste into ChatGPT, and this could include confidential information, intellectual property, client data, source code, financials, or regulated information. Depending on the use case, this may violate regional or industry-specific data privacy regulations.

For example, three separate engineers at Samsung recently shared sensitive corporate information with the AI bot to find errors in semiconductor code, optimize Samsung equipment code, and summarize meeting notes. Divulging trade secrets to an LLM-based tool is highly risky, since the provider might use your inputs to retrain the model, and that data could then appear verbatim in future responses. Due to fears about how generative AI could negatively impact the financial industry, JPMorgan temporarily restricted employee use of ChatGPT, and Goldman Sachs and Citi followed with similar actions.

ChatGPT also experienced a significant bug that leaked user conversation histories. The privacy breach was alarming enough to prompt Italy to temporarily ban the tool pending an investigation into possible data privacy violations.

Furthermore, since ChatGPT was trained on data scraped from the public web, its outputs may include intellectual property from third-party sources. Researchers have shown that individual training examples can sometimes be recovered from a model's outputs. Known as a training data extraction attack, this involves querying a language model to recover individual examples from its training data, as a paper on Cornell University's arXiv preprint server explains. Beyond training data extraction, the tool's ability to recall specific pieces of information and usernames is another serious privacy concern.

Recommendations to Secure ChatGPT Usage

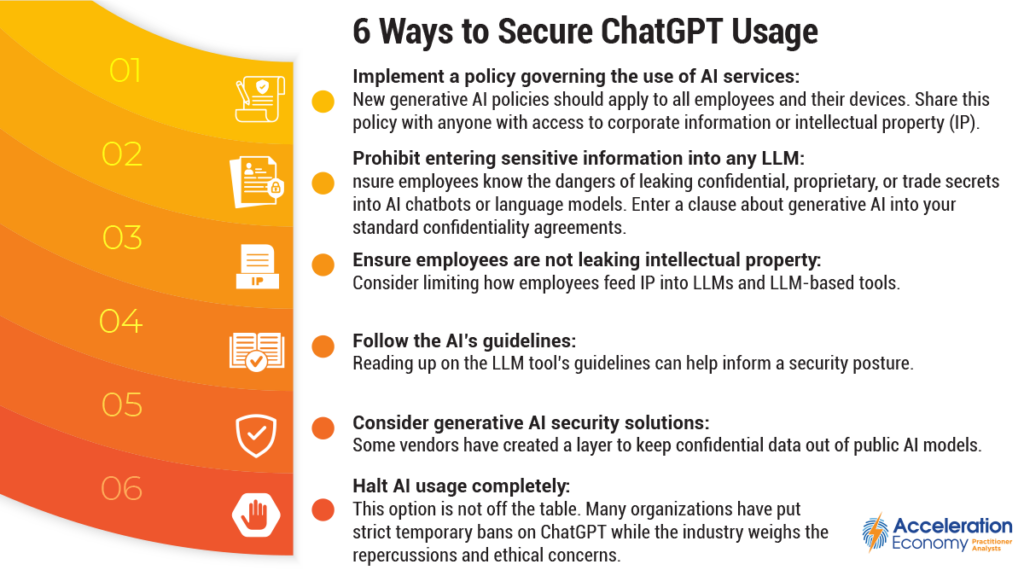

A handful of large corporations, including Amazon, Microsoft, and Walmart, have issued warnings to employees regarding the use of LLM-based apps. But small and medium-sized enterprises also have a role in governing their employees' use of potentially harmful tools. So how can leaders respond to this new wave of ChatGPT-related security problems? Here are some tactics for executives to consider:

- Implement a policy governing the use of AI services: New generative AI policies should apply to all employees and their devices, whether they work on-premises or remotely. Share this policy with anyone who has access to corporate information or intellectual property (IP), including employees, contractors, and partners.

- Prohibit entering sensitive information into any LLM: Ensure employees understand the dangers of leaking confidential information, proprietary data, or trade secrets into AI chatbots or language models. This includes personally identifiable information (PII). Add a clause about generative AI to your standard confidentiality agreements.

- Ensure employees are not leaking intellectual property: As with clearly sensitive information, consider also limiting how employees feed IP into LLMs and LLM-based tools. This might include designs, blog posts, documentation, or other internal resources that are not intended to be published on the Web.

- Follow the AI provider's guidelines: Reading up on an LLM tool's guidelines can help inform your security posture. For example, OpenAI's guidance for ChatGPT clearly states: "We are not able to delete specific prompts from your history. Please don't share any sensitive information in your conversations."

- Consider generative AI security solutions: Vendors such as Cyberhaven have created a layer to keep confidential data out of public AI models. Of course, this may be overkill; for some organizations, simply communicating the company policy may be enough to prevent misuse.

- Halt AI usage completely: This option is not off the table. Many organizations have put strict temporary bans on ChatGPT while the industry weighs its risks and ethical implications. Some have called for going further: in an open letter, Elon Musk and other AI experts asked AI labs to pause giant AI experiments for at least six months while society grapples with the consequences.
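To make the idea of a "layer to keep confidential data out of public AI models" more concrete, here is a minimal sketch of a prompt-screening filter that runs before text is sent to an external LLM. It redacts a few common patterns and flags prompts that mention blocked internal terms. The regex patterns, the `screen_prompt` function, and the `PROJECT-ORION` codename are hypothetical illustrations; this is not how Cyberhaven or any specific vendor's product works, and a short regex list is far from a complete data loss prevention (DLP) solution.

```python
import re
from dataclasses import dataclass, field

# Illustrative patterns only (assumptions, not a complete DLP rule set).
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "API_KEY": re.compile(r"\b(?:sk|key)-[A-Za-z0-9]{16,}\b"),
}

# Hypothetical internal codenames that should never leave the company at all.
BLOCKED_TERMS = ["PROJECT-ORION"]


@dataclass
class ScreenResult:
    redacted: str                      # prompt text with pattern matches replaced
    findings: dict[str, int] = field(default_factory=dict)  # label -> match count
    blocked: bool = False              # True means: do not send this prompt


def screen_prompt(prompt: str) -> ScreenResult:
    """Redact known sensitive patterns and flag blocked terms before a prompt is sent."""
    findings: dict[str, int] = {}
    redacted = prompt
    for label, pattern in PATTERNS.items():
        # subn returns the new string and the number of replacements made.
        redacted, count = pattern.subn(f"[{label} REDACTED]", redacted)
        if count:
            findings[label] = count
    # Case-insensitive check for terms that should block the prompt entirely.
    blocked = any(term.lower() in prompt.lower() for term in BLOCKED_TERMS)
    return ScreenResult(redacted=redacted, findings=findings, blocked=blocked)


if __name__ == "__main__":
    result = screen_prompt(
        "Summarize: contact jane.doe@example.com, SSN 123-45-6789, re PROJECT-ORION."
    )
    print(result.redacted)
    print(result.findings, result.blocked)
```

In this sketch, redaction handles data that can be safely masked, while the `blocked` flag lets a gateway refuse a prompt outright; a real deployment would pair a filter like this with the written policy rather than rely on it alone.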

The Move to AI

Looking to the future, greater AI adoption seems inevitable. Acumen Research and Consulting predicts that by 2030 the global generative AI market will reach $110.8 billion, growing at a 34.3% compound annual growth rate (CAGR). Many businesses are already integrating generative AI productively to power application development, customer service functions, research efforts, content creation, and other areas.

Given these benefits, banning generative AI outright could put an enterprise at a competitive disadvantage. Leaders must therefore weigh its adoption carefully and implement new policies to address potential security violations.

This article has been updated since it was originally published on April 23, 2023.

Looking for real-world insights into artificial intelligence and hyperautomation? Subscribe to the AI and Hyperautomation channel: