As rapid AI adoption and experimentation continue, many have begun to think about how the technology might aid cybersecurity. One potential area of impact is vulnerability research and discovery. Currently, many organizations struggle to keep pace with the ever-expanding number of vulnerabilities; backlogs are growing into the hundreds of thousands or even millions. AI could help organizations identify and remediate vulnerabilities before attackers discover and exploit them.

Google is among those optimistic about AI’s potential to address vulnerabilities. It recently published internal research on the topic dubbed “Project Naptime.” In this analysis, I’ll examine the project’s potential impact and its promise for improved vulnerability management.

What Is Project Naptime?

Google announced that it has been conducting internal research using large language models (LLMs) to automate and accelerate vulnerability research, which has historically been a laborious, manual activity.

Its testing and research were guided by several key principles:

- Space for reasoning

- Interactivity

- Specialized tools

- Perfect verification

- Sampling strategy
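Two of these principles, perfect verification and a sampling strategy, can be illustrated with a small sketch. The Python below is a toy model, not Google’s implementation: `toy_target`, `MAGIC`, `BUFFER_SIZE`, `verify`, `attempt`, and `sample` are hypothetical names, and random guessing stands in for the LLM proposing inputs. A candidate counts as a finding only if re-running the target reproduces the crash, and several independent trajectories are run so that a single successful attempt is enough.

```python
import random
from typing import Callable, Optional

MAGIC = 0x7F        # hypothetical record-type byte that reaches the buggy path
BUFFER_SIZE = 16    # hypothetical fixed buffer size in the toy target


def toy_target(data: bytes) -> None:
    """Toy stand-in for a program under test; raises to simulate a crash."""
    if len(data) >= 2 and data[0] == MAGIC and data[1] > BUFFER_SIZE:
        # A length field larger than the buffer models a buffer overflow.
        raise MemoryError("simulated out-of-bounds write")


def verify(target: Callable[[bytes], None], candidate: bytes) -> bool:
    """A finding counts only if re-running the target reproduces the crash."""
    try:
        target(candidate)
    except MemoryError:
        return True
    return False


def attempt(rng: random.Random, max_steps: int) -> Optional[bytes]:
    """One independent trajectory: propose inputs until one verifies or the budget runs out."""
    for _ in range(max_steps):
        # Random guessing stands in for an LLM proposing inputs (assumption).
        candidate = bytes([rng.randrange(256), rng.randrange(256)])
        if verify(toy_target, candidate):
            return candidate
    return None


def sample(num_trajectories: int, max_steps: int, seed: int = 0) -> list[bytes]:
    """Run several independent trajectories and keep every verified crash."""
    master = random.Random(seed)
    findings = []
    for _ in range(num_trajectories):
        result = attempt(random.Random(master.getrandbits(32)), max_steps)
        if result is not None:
            findings.append(result)
    return findings


findings = sample(num_trajectories=20, max_steps=50)
print(f"{len(findings)} of 20 trajectories produced a verified crash")
```

Because every reported input has already been replayed against the target, unverified guesses never reach the findings list, which is the property that keeps false positives out.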

Google has implemented an architecture consisting of one or more AI agents, a codebase, and specialized tools that mirror a human security researcher’s workflow and approach.

The tools include a Code Browser, Python, a Debugger, a Controller, and a Reporter. Working together, they let the agent examine a codebase, feed inputs to an application, monitor its runtime behavior, and report the results: for example, whether the program crashes or behaves in unintended ways, which points to potential flaws and vulnerabilities.
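A tool-driven loop of this kind can be sketched in a few dozen lines of Python. This is an illustrative assumption, not the Naptime code: the toy `parse_record` function, the tool functions, and the `Controller` class are hypothetical, the Controller is modeled here as the loop that dispatches the agent’s tool calls, and a scripted agent stands in for the LLM. The Reporter records a finding only when the Debugger reproduces the crash.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical toy codebase: source text the Code Browser can show the agent.
SOURCE = {
    "parse_record": (
        "def parse_record(data):\n"
        "    n = data[0]\n"
        "    buf = [0] * 8\n"
        "    for i in range(n):\n"
        "        buf[i] = data[1 + i]  # n is never checked against len(buf)\n"
    ),
}


def parse_record(data: bytes) -> list[int]:
    """Toy target with a missing bounds check (IndexError models the overflow)."""
    n = data[0]
    buf = [0] * 8
    for i in range(n):
        buf[i] = data[1 + i]
    return buf


def code_browser(symbol: str) -> str:
    """Code Browser tool: return the source for a named symbol."""
    return SOURCE.get(symbol, f"<no symbol named {symbol}>")


def python_tool(expr: str) -> bytes:
    """Python tool: evaluate an expression that builds a test input.
    Builtins are stripped here, but a real system would need a proper sandbox."""
    return eval(expr, {"__builtins__": {}}, {})


def debugger(data: bytes) -> str:
    """Debugger tool: run the target on an input and report whether it crashes."""
    try:
        parse_record(data)
    except Exception as exc:
        return f"CRASH: {type(exc).__name__}: {exc}"
    return "OK: no crash"


Action = tuple[str, str]  # (tool name, argument)


@dataclass
class Controller:
    """Dispatches the agent's tool calls and feeds observations back to it."""
    findings: list[bytes] = field(default_factory=list)

    def run(self, agent: Callable[[list[str]], Action], max_turns: int = 10) -> list[bytes]:
        history: list[str] = []
        current_input = b""
        for _ in range(max_turns):
            tool, arg = agent(history)
            if tool == "reporter":
                # Reporter: record a finding only if the crash reproduces.
                if debugger(current_input).startswith("CRASH"):
                    self.findings.append(current_input)
                break
            if tool == "code_browser":
                observation = code_browser(arg)
            elif tool == "python":
                current_input = python_tool(arg)
                observation = f"built a {len(current_input)}-byte input"
            elif tool == "debugger":
                observation = debugger(current_input)
            else:
                observation = f"unknown tool {tool!r}"
            history.append(f"{tool}({arg!r}) -> {observation}")
        return self.findings


def scripted_agent(history: list[str]) -> Action:
    """Stand-in for the LLM: a fixed plan indexed by how many turns have passed."""
    plan = [
        ("code_browser", "parse_record"),
        ("python", 'b"\\x09" + b"A" * 9'),  # length byte 9 > buffer size 8
        ("debugger", ""),
        ("reporter", ""),
    ]
    return plan[len(history)]


print(Controller().run(scripted_agent))
```

Running `Controller().run(scripted_agent)` returns a list containing the single input `b"\x09" + b"A" * 9`, whose length byte drives the loop one element past the eight-element buffer. In a real system the scripted plan would be replaced by model calls that read the accumulated `history` and choose the next tool.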

This combination of tools and techniques lets the LLM behave much like a human vulnerability researcher. The approach can be refined iteratively to maximize the LLM’s effectiveness at identifying and reporting potential vulnerabilities while minimizing undesired outcomes such as false positives, which are costly to triage and verify.

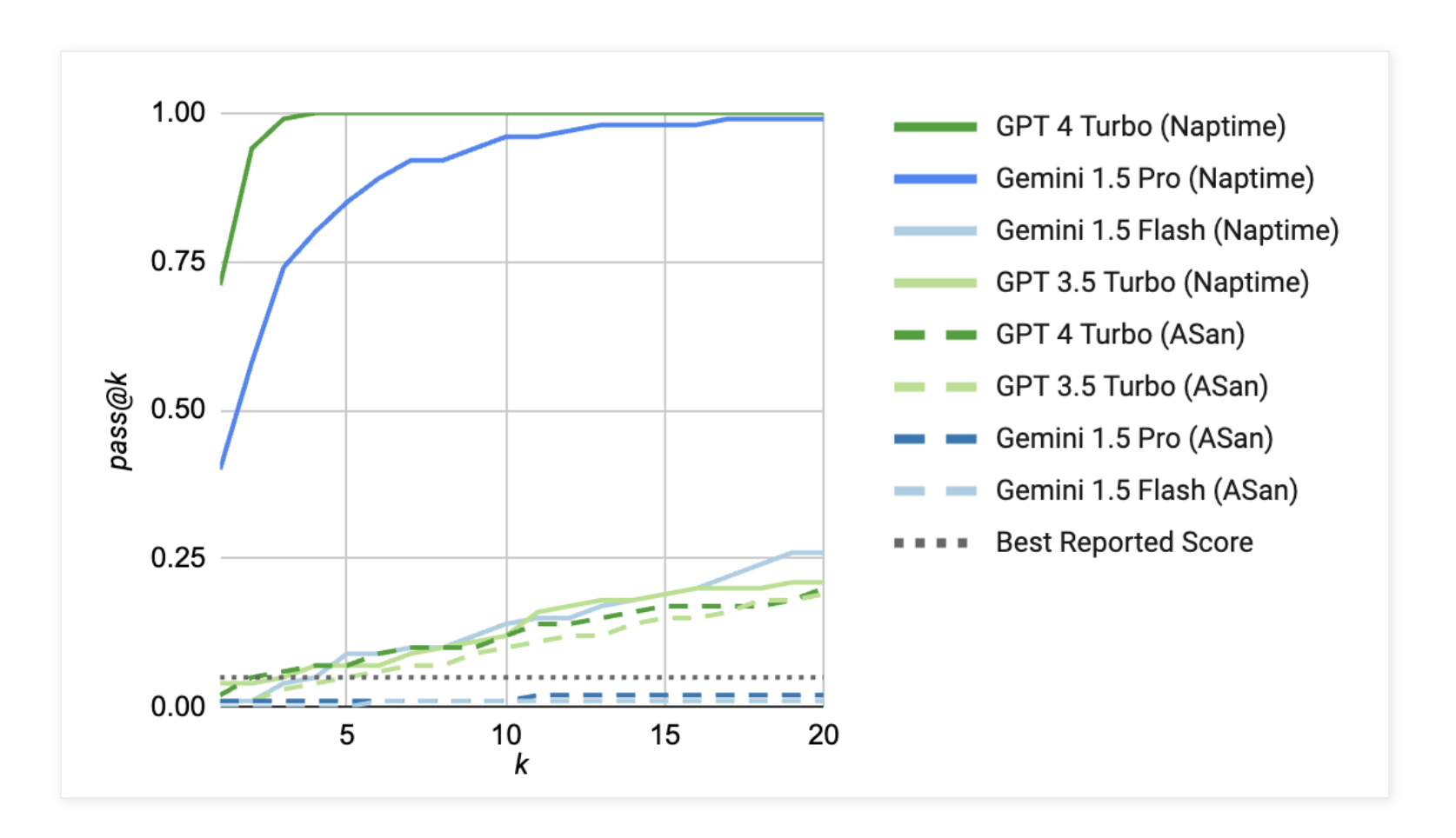

Google used Meta’s CyberSecEval 2 benchmark to measure how effectively LLMs identify and exploit vulnerabilities.


Google’s publication claims a 20-fold improvement over previously published results for similar research, with particularly strong performance on buffer overflow and advanced memory corruption vulnerabilities.

Google tested several models to measure their performance across different types of vulnerabilities. The image below compares several models’ performance in identifying buffer overflow vulnerabilities against a baseline, the “best reported score”:

What’s the Potential?

Google’s Project Naptime findings are certainly promising. Many known vulnerabilities are still catalogued in databases such as the National Vulnerability Database (NVD) from the National Institute of Standards and Technology (NIST). Reports frequently cite organizations’ inability to discover and remediate vulnerabilities as quickly as attackers can exploit them. There is also a push for secure-by-design software and to “shift security left,” that is, to address security earlier in the software development lifecycle (SDLC).

If organizations use LLMs and GenAI to enhance security research, identify vulnerabilities, and prioritize them for remediation before attackers exploit them, this could significantly reduce systemic risk.

This also applies to software supply chain security, where attackers target widely distributed commercial products as well as open-source software. If LLM-enabled agents that mimic security researchers explore the codebases of commercial and open-source software, they can identify vulnerabilities to be remediated before attackers exploit them, mitigating risk for software consumers across the ecosystem.

With daily headlines raising concerns about attackers targeting the digital infrastructure that supports consumers and businesses, the ability to use LLMs to quickly identify vulnerabilities for remediation, and to amplify human security research capabilities, makes this research highly promising.

While it remains to be seen whether these techniques can scale to augment or replace traditional security research, the early results are intriguing. What is clear is that organizations need all available help to quickly identify and remediate vulnerabilities and to reduce risks to themselves and the digital products they increasingly rely on.