What does it take to drive a successful AI initiative?

- Software? Yes.

- Partners? Yes.

- Education and technical literacy? Yes.

The widespread availability of these resources and the proliferation of out-of-the-box AI products can make it easy to forget another foundational element of AI applications: hardware.

Most of the hardware currently available to run AI workloads was designed with other functions in mind. As such, although existing options are powerful and capable, new solutions developed natively for AI use cases are required. Recently, IBM announced a new prototype chip architecture that could make AI development and processing faster, cheaper, and more energy efficient.

Chip Design Revolution

For years, Dr. Dharmendra S. Modha, IBM Fellow and IBM Chief Scientist for Brain-inspired Computing, and his team at IBM Research’s lab in Almaden, California, have been working on a chip architecture that could revolutionize how organizations efficiently scale AI hardware systems.

NorthPole is a digital AI chip for neural inference, modeled on the computation pathways of the human brain. It departs dramatically from standard chip design, in which data is shuffled back and forth between memory, processing, and other elements.

According to IBM, the prototype, which builds on the company’s earlier TrueNorth chip, is considerably more energy- and space-efficient, has the lowest latency of any existing chip, and is approximately 4,000 times faster than its predecessor.

All the memory for NorthPole is stored on the chip, not separately, making it easy to integrate and capable of carrying out lightning-fast AI inferencing. “It’s an entire network on a chip,” says Modha. “Architecturally, NorthPole blurs the boundary between compute and memory.

“At the level of individual cores, NorthPole appears as memory-near-compute and from outside the chip, at the level of input-output, it appears as an active memory.”

Because of this architecture, NorthPole can only pull data from the memory it contains; it can’t draw on outside sources. IBM researchers nonetheless see this limitation as a benefit to consumers, and larger neural networks can still be supported by dividing them across multiple connected NorthPole chips.
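To make the idea of dividing a network across chips more concrete, here is a minimal sketch in Python. It is not IBM’s partitioning method, which this article does not describe. It simply assumes that each chip has a fixed on-chip memory budget and greedily assigns consecutive layers to chips until that budget is full. The function name `partition_layers`, the layer sizes, and the 128 MB capacity are all hypothetical values chosen for illustration.

```python
from typing import List


def partition_layers(layer_sizes_mb: List[float], chip_capacity_mb: float) -> List[List[int]]:
    """Greedily assign consecutive layers to chips without exceeding capacity.

    Returns one list of layer indices per chip. This is an illustrative
    heuristic, not a description of how NorthPole actually splits networks.
    """
    chips: List[List[int]] = []
    current: List[int] = []
    used = 0.0
    for index, size in enumerate(layer_sizes_mb):
        # A single layer larger than one chip's memory cannot be placed here.
        if size > chip_capacity_mb:
            raise ValueError(f"layer {index} ({size} MB) does not fit on one chip")
        # Start a new chip when the next layer would overflow the current one.
        if used + size > chip_capacity_mb:
            chips.append(current)
            current, used = [], 0.0
        current.append(index)
        used += size
    if current:
        chips.append(current)
    return chips


# Hypothetical layer sizes and chip capacity, for illustration only.
layer_sizes_mb = [40, 60, 80, 30, 90, 20]
assignment = partition_layers(layer_sizes_mb, chip_capacity_mb=128)
print(assignment)  # [[0, 1], [2, 3], [4, 5]]
```

In this toy example, six layers end up on three chips, and each chip only ever reads the weights it holds locally. A real deployment would also have to account for activations passed between chips, which this sketch ignores.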

The chip was designed explicitly for AI inferencing and doesn’t need elaborate cooling systems, which makes it more adaptable to different deployment settings. In terms of AI use cases, the chip has a broad remit.

Much of the testing so far has focused on computer vision applications, such as image segmentation and video classification. However, the chip has also been run on other workloads, such as natural language processing (NLP) and speech recognition.

Regarding real-world use cases, NorthPole’s portability makes it the ideal candidate for AI tasks at the edge. IBM cites examples like autonomous vehicles, satellite monitoring applications, wildlife management, road safety, robotics, and cybersecurity. However, this could well be the tip of the iceberg.

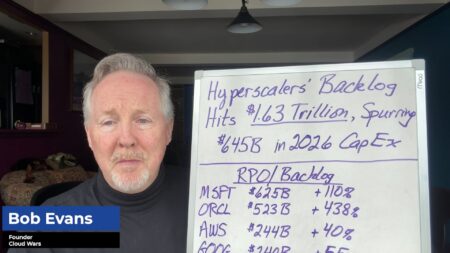

Is Hardware the New AI Battleground?

There’s no avoiding the fact that it has taken IBM, one of the world’s foremost computer hardware manufacturers, decades to come to the point where the NorthPole chip could be prototyped. However, this news could spark a race to develop alternative AI-specific chips that cover the core requirements of efficiency, scalability, and speed.

IBM is defining the game’s rules by focusing on NorthPole’s capabilities for AI inferencing, a specific function that means the chip hasn’t been designed with flexibility in mind. The most significant software and data companies already deliver AI solutions built for dedicated customers, whether private sector or enterprise organizations, and for specific use cases, such as chatbots designed for particular platforms and trained on contextual data. The chip industry could follow suit.

With AI fast becoming ingrained in the technological fabric of organizations, it seems logical that future developments will rely on specialization as their key differentiator. Just as there’s no one-size-fits-all CRM, companies could reasonably weigh the nuances of hardware when developing a new AI use case.