Welcome to the AI Ecosystem Report, featuring practitioner analyst and entrepreneur Toni Witt. This series is intended to deliver the timely intelligence about artificial intelligence (AI) you need to get up to speed for an upcoming client engagement or board meeting.

Highlights

Innovation (00:28)

Google released a new AI model designed to make robots more useful, especially for future industrial applications. Robotics Transformer 2 (RT-2) is a first-of-its-kind vision-language-action (VLA) model. It is trained on text and images pulled from the web, along with robot data, and it outputs robotic actions directly.

Robotics has long been held back by limitations that make general-purpose robots hard to build. Training a robot explicitly for every task and environment is extremely time-consuming and ultimately not feasible, because real-world situations vary enormously and often involve unforeseen circumstances.

So, Google built a single AI model that performs high-level reasoning and translates it directly into low-level instructions a robot can execute. RT-2 draws on the web data it was trained on to produce these outputs. It is a transformer model, a class of machine learning model, and it can transfer concepts learned during training to scenarios it has never encountered.
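One way a single model can go from reasoning to "low-level instructions" is to express robot actions as text tokens, so the model emits motor commands the same way it emits words. Google has described RT-2 as representing actions as tokens in this spirit. The sketch below is a minimal illustration of that idea, not RT-2's actual implementation: it assumes each continuous action dimension is clipped to a hypothetical range and discretized into an assumed 256 bins, with the bin indices written out as a space-separated string. The function names `tokenize_action` and `detokenize_action` are hypothetical.

```python
# Hypothetical illustration of turning continuous robot actions into
# text tokens and back. Bin count and ranges are assumptions, not RT-2's config.

NUM_BINS = 256  # assumed number of discrete bins per action dimension


def tokenize_action(values, low, high, num_bins=NUM_BINS):
    """Map each continuous action value to a bin index and join them as text."""
    tokens = []
    for v, lo, hi in zip(values, low, high):
        if hi <= lo:
            raise ValueError("each upper bound must exceed its lower bound")
        v = min(max(v, lo), hi)  # clip to the allowed range
        frac = (v - lo) / (hi - lo)  # normalize to [0, 1]
        idx = min(int(frac * num_bins), num_bins - 1)  # top edge maps to last bin
        tokens.append(str(idx))
    return " ".join(tokens)


def detokenize_action(text, low, high, num_bins=NUM_BINS):
    """Map a space-separated string of bin indices back to bin-center values."""
    values = []
    for tok, lo, hi in zip(text.split(), low, high):
        idx = int(tok)
        values.append(lo + (idx + 0.5) * (hi - lo) / num_bins)
    return values


if __name__ == "__main__":
    # Hypothetical 3-dimensional action: x/y position deltas and a gripper setting.
    low, high = [-1.0, -1.0, 0.0], [1.0, 1.0, 1.0]
    text = tokenize_action([0.0, -1.0, 1.0], low, high)
    print(text)  # 128 0 255
    print(detokenize_action(text, low, high))
```

Because actions come out as ordinary tokens, a language model can produce them with the same decoding step it uses for text. The trade-off in this sketch is a quantization error of at most half a bin width per dimension.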

Funding (04:31)

There is a need for innovation in AI hardware. Startups have been rising to meet demand for the chips needed for AI training and AI inference. Kneron, for example, is developing AI chips to power self-driving cars. A few years ago, it raised a $49 million extension to its Series B round from various investors.

Kneron started out building application-specific integrated circuits (ASICs), which are chips custom-built for a single workload. While these chips are highly efficient for that one use case, they can't do anything else. Kneron is now taking a different approach: a low-power, reconfigurable AI chip designed to integrate with existing IoT systems.

Other companies are also working on custom chips, but Kneron stands out because its chips are relatively lightweight and flexible, and more reconfigurable than typical ASICs. They can also reduce latency.

Solution of the Week (07:33)

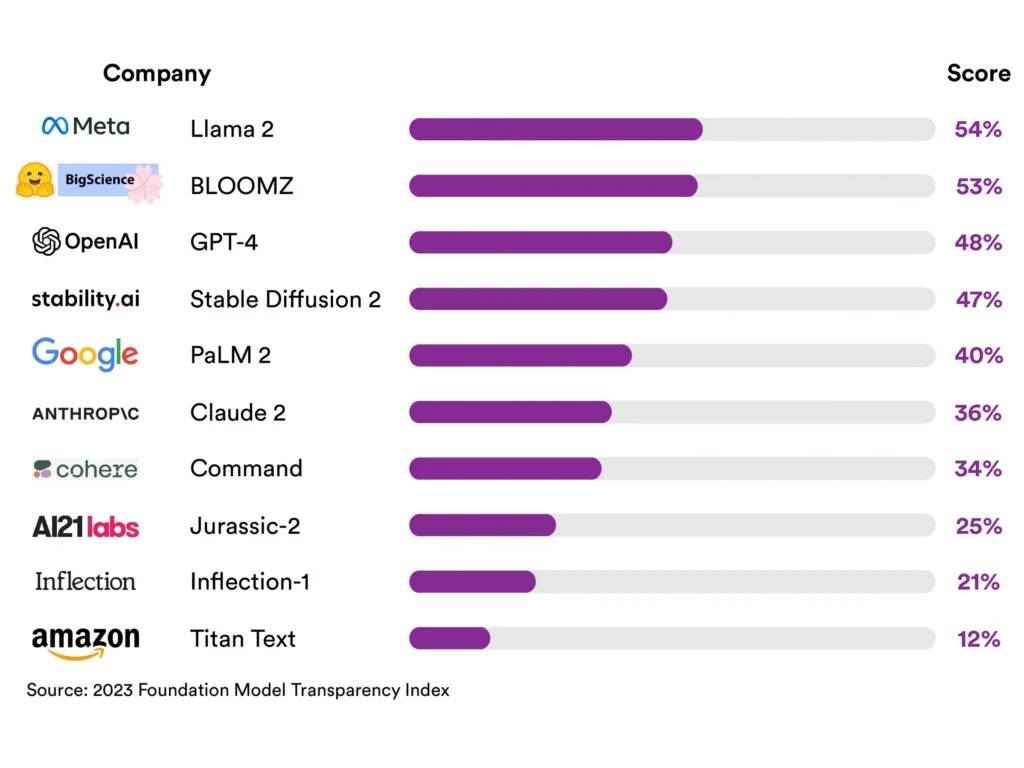

A study recently came out about the top large language models and their lack of transparency. Companies looking to build AI solutions into their operations must consider the ethics of how they leverage AI.

Stanford released research, its Foundation Model Transparency Index, that scores the transparency of 10 major large language models (LLMs). The index combines 100 different indicators of transparency.

The highest score went to Meta's Llama 2, with 54 out of 100. Notably, none of the models reaches an adequate level of transparency: judged by the standard of a school grade, none of them would pass.
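The scoring described above comes down to simple arithmetic. The sketch below is a minimal illustration under stated assumptions: each of the 100 indicators is treated as an equally weighted yes/no check, and a hypothetical passing threshold of 60 stands in for the "passing grade" analogy, which the study frames as a comparison rather than an official cutoff. The names `transparency_score`, `passes`, and `PASSING_SCORE` are hypothetical.

```python
# Hypothetical scoring sketch: equally weighted yes/no indicators and an
# assumed school-style passing threshold. Not the study's actual methodology code.

PASSING_SCORE = 60  # assumed passing grade, for illustration only


def transparency_score(indicators: dict) -> float:
    """Return the share of satisfied indicators, scaled to a 0-100 score."""
    if not indicators:
        raise ValueError("at least one indicator is required")
    met = sum(1 for satisfied in indicators.values() if satisfied)
    return 100 * met / len(indicators)


def passes(score: float, passing: float = PASSING_SCORE) -> bool:
    """Check a score against the assumed passing threshold."""
    return score >= passing


if __name__ == "__main__":
    # Hypothetical model satisfying 54 of 100 indicators, matching the top score.
    indicators = {f"indicator_{i}": i < 54 for i in range(100)}
    score = transparency_score(indicators)
    print(score, passes(score))  # 54.0 False
```

With 100 indicators, the score is simply the number of indicators met, which is why the top result reads as 54/100.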

This research will help regulators, buyers, and sellers of AI technology. Having this information will boost accountability and lead to better buying decisions. More research is needed on safety and transparency, especially as AI is woven further into daily life.