The U.S. National Institute of Standards and Technology (NIST) has long been an industry leader in providing guidance, frameworks, and best practices for securing the use of technologies. Its role in the AI ecosystem is proving no different.

In October 2023, the White House issued an executive order (EO) titled “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence,” or the AI EO for short. The EO set out an ambitious vision for AI safety and security, including tasking NIST with “Developing Guides, Standards, and Best Practices for AI Safety and Security.”

NIST has done just that, publishing a series of resources to meet this requirement, among them the AI Risk Management Framework (AI RMF), work building on its Secure Software Development Framework (SSDF), and the AI RMF GenAI Profile. These resources help organizations govern and manage AI risks, and they provide comprehensive, vendor-agnostic guidance to support the business in secure AI adoption and usage.

Let’s briefly examine each and how they may be used below.

AI Risk Management Framework (AI RMF)

The AI RMF is “intended to address risks in the design, development, use, and evaluation of AI products, services, and systems.” This makes it a great resource to help organizations securely adopt AI as businesses rush in to explore AI use cases, utilize external AI services, and build on top of AI foundation models.

AI RMF is oriented around mapping risks, measuring their potential impacts, and then managing those risks appropriately.

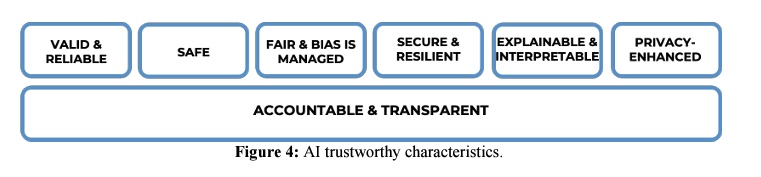

It examines the potential harms of AI use, including harm to people, organizations, systems, and the overall ecosystem. It also emphasizes the need for AI trustworthiness: AI systems should be valid, reliable, safe, and fair, with characteristics such as privacy taken into account.

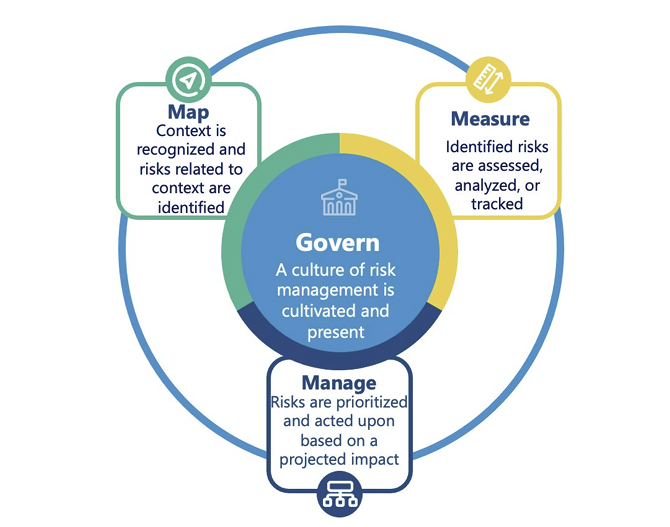

The NIST AI RMF “Core” includes four functions: map, measure, manage, and govern. Each involves categories and subcategories with their own associated risks, considerations, and potential actions organizations can take to mitigate risk.

There are also “Profiles” that align with specific use cases or scenarios.

AI RMF GenAI Profile

As part of the AI RMF, NIST has begun producing “profiles” for specific use cases. It took a similar approach with the widely popular NIST Cybersecurity Framework (CSF), which has profiles for specific industries and organizational use cases.

GenAI and large language models (LLMs) represent some of the fastest-growing areas of adoption within the broader domain of AI. As organizations rapidly adopt GenAI capabilities and services, it is crucial that they build on a sound foundation, and the AI RMF GenAI Profile represents an opportunity to do that.

The AI RMF GenAI Profile covers risks that are either unique to, or exacerbated by, GenAI. These include, but are not limited to, dangerous recommendations, data privacy, human-AI configuration, information integrity, and intellectual property (IP) concerns. The profile lays out these key risks, their potential impact on organizations, and recommendations for mitigating them. It also includes a comprehensive table that lists each action's unique identifier, the actions organizations can take to manage the risks, the risks each action is tied to, and the relevant AI actors involved.

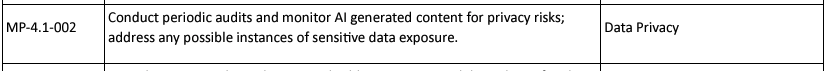

For example, risks associated with information integrity under the Govern function may call for actions such as maintaining long-term documentation retention policies for auditing, investigation, and content provenance. Another example, this time under the Map function, involves data privacy: it calls for the organization to conduct periodic audits and monitor AI-generated content for privacy risks or sensitive data exposure.
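For teams that want to track these actions in their own tooling, the table's columns map naturally onto a simple record type. The following is a minimal Python sketch, not anything published by NIST: the `SuggestedAction` class, the `open_actions_by_risk` helper, the `EXAMPLE-...` identifiers, and the actor names are all hypothetical placeholders, and the two entries simply restate the examples above.

```python
from dataclasses import dataclass, field
from enum import Enum


class Function(Enum):
    """The four AI RMF Core functions."""
    GOVERN = "govern"
    MAP = "map"
    MEASURE = "measure"
    MANAGE = "manage"


@dataclass
class SuggestedAction:
    """One row of an internal tracking register (hypothetical structure)."""
    action_id: str            # placeholder ID, not a real profile identifier
    function: Function        # Core function the action falls under
    description: str          # what the organization should do
    risks: list[str]          # GenAI risks the action is tied to
    actors: list[str] = field(default_factory=list)  # assumed actor labels
    done: bool = False        # whether the organization has implemented it


def open_actions_by_risk(actions):
    """Group the IDs of not-yet-implemented actions by the risk they address."""
    grouped = {}
    for action in actions:
        if action.done:
            continue
        for risk in action.risks:
            grouped.setdefault(risk, []).append(action.action_id)
    return grouped


# Two entries that restate the examples discussed in the text.
register = [
    SuggestedAction(
        action_id="EXAMPLE-GOVERN-1",
        function=Function.GOVERN,
        description=("Maintain long-term documentation retention policies "
                     "for auditing, investigation, and content provenance."),
        risks=["information integrity"],
        actors=["governance team"],
    ),
    SuggestedAction(
        action_id="EXAMPLE-MAP-1",
        function=Function.MAP,
        description=("Conduct periodic audits and monitor AI-generated "
                     "content for privacy risks or sensitive data exposure."),
        risks=["data privacy"],
        actors=["privacy team"],
    ),
]

if __name__ == "__main__":
    for risk, ids in open_actions_by_risk(register).items():
        print(f"{risk}: {', '.join(ids)}")
```

Keying the open items by risk rather than by function is only one possible design choice; it makes it easy to see which of the profile's risks still have no implemented action behind them.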

Below is an example excerpt from the tables, demonstrating one of the risks discussed above:

The AI RMF GenAI Profile includes a comprehensive appendix with key considerations for any organization designing, developing, and using GenAI and looking to manage GenAI risks. It specifically calls out considerations around governance, pre-deployment testing, content provenance, and incident disclosure. The appendix provides fundamental controls, recommendations, and additional resources for addressing these considerations and risks.

Closing Thoughts

The NIST AI RMF and GenAI Profile are powerful, comprehensive resources for organizations pursuing secure AI adoption and usage. Security is often said to be “bolted on” rather than “built in,” but savvy security leaders who think ahead can use these resources, along with others from organizations such as the Open Web Application Security Project (OWASP) and the Cloud Security Alliance, to help the business achieve secure AI outcomes.