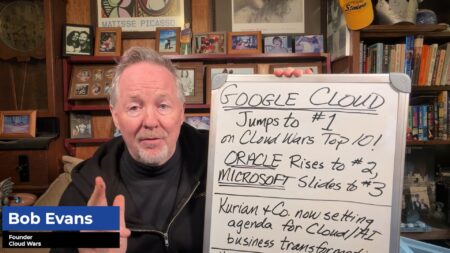

The HIMSS 2023 Global Health Conference and Exhibition, which offered in-depth coverage of healthcare and technology, ran last week, so it’s an opportune time to review the big takeaways.

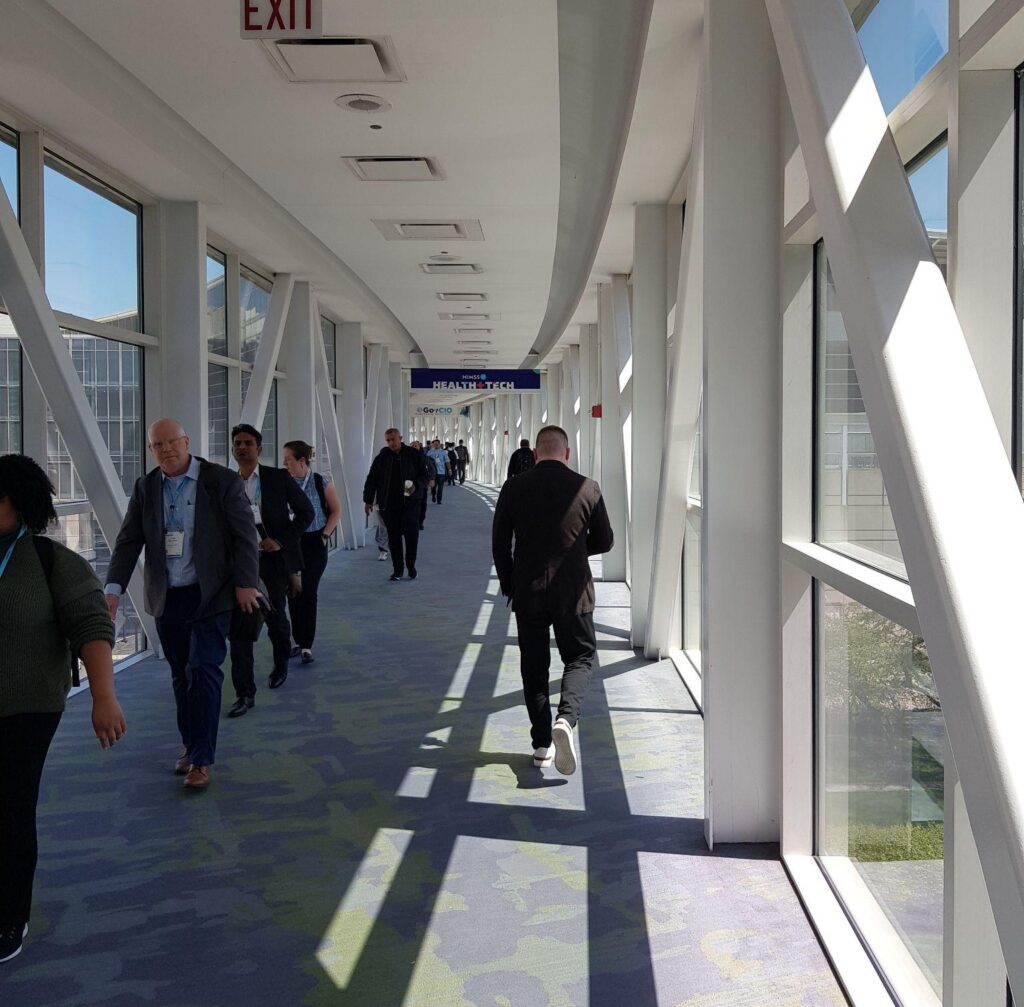

First of all, the scale of the event was impressive, with 40,000 people in attendance. I have never felt an industry so alive as I did at HIMSS. Everywhere you turned, there were conversations, many of them with potential to impact lives across the world.

The exhibit floor was immense; every booth was packed with attendees, demos, and exhibitor representatives. There were many companies I had never heard of, working behind the scenes in healthcare and keeping society functioning in ways I had never even considered. There wasn’t a single seat open during the keynote, even though the room could probably have been refitted into a sports stadium. The dozens of concurrent sessions posed many big questions and, to some extent, answered them. The size and scale were humbling.

I also loved seeing the awkward hybrid work going on again as live events pick back up: professionals in suits on their phones, huddled over laptops, and sitting on the floor by power outlets. In the morning, there must have been over 100 people in line, waiting anxiously for the caffeine to start their day. Good thing the press/analyst facilities had coffee!

On a more serious note, artificial intelligence (AI) and machine learning (ML) dominated the conversation. I heard ‘ChatGPT’ mentioned in more hallway conversations than I can count, and rightfully so. It’s become clear that the post-pandemic healthcare industry is in dire need of automation, and every healthcare organization now has hundreds of potential use cases.

This is partly because the technology has improved in recent years. More importantly, countless companies, including the vendors on our Acceleration Economy AI/Hyperautomation Top 10 Shortlist, have stepped up to help healthcare professionals embed AI into their operations. Unlike other novel technologies such as blockchain or virtual reality (VR), there was absolutely no doubt about the need to automate and analyze with AI. The question was how best to do so.

This is where the healthcare industry differs from others. When dealing with patient data and human lives, there is an intense focus on governance, responsible AI, explainability, and avoiding the so-called ‘black box problem.’ All of these somewhat buzzy terms boil down to a few key questions: How are models making the decisions? Are they looking at the right things? Are we ethically dealing with patient data? Is our system secure and robust? Are we compliant?

Unfortunately, the startup approach to AI/ML, “Let’s build something and see what happens,” doesn’t really work when you’re dealing with real lives. As a result, healthcare organizations need enterprise-level governance of their AI initiatives more than they need speed of adoption.

At one of the sessions on AI frameworks, a speaker pointed out the importance of building, and adhering to, such a framework. For two examples, see the WHO framework and a paper in Nature. The speaker recommended reading 10 or 20 such frameworks, recognizing that roughly 90% of their content overlaps, and customizing the remaining 10% around your organization’s unique values. The resulting framework can then guide the hundreds or thousands of micro-integrations of AI/ML into use cases across your operations.

But the process isn’t done once models are put into production under the framework. In the spirit of agile development, both the models and the rubric itself must evolve to fit the needs of the time. Technology development is only accelerating and seems to be in an arms race with new regulations and proposals cropping up around the world, such as the 2023 Data Protection Act, the German AI Cloud Service Compliance law, the Algorithmic Accountability Act, and various GDPR-style proposals. Hundreds of papers on responsible AI are released every month, so your governance strategy should keep improving too.

The lack of a unified, unchanging framework shouldn’t stop you from building AI into operational processes. One of the sessions described a research project that uses ML to predict asthma hospitalizations in children and prevents almost 200 hospitalizations a year, saving both hospital resources and lives. Not using technology for ends like this runs counter to everything the healthcare industry stands for. One of the speakers encouraged organizations to use older models if need be and to build their own data pipelines instead of relying on Big Tech and OpenAI. The speaker also argued that operating ahead of regulation is acceptable if it serves ethical ends.

Finally, one big question remains for the industry: Which functions within the patient care journey should be fully automated, and which should always keep a human in the loop? Ultimately, healthcare is about caring for people. Maybe that needs a human touch. But maybe not. I don’t have an answer, but the question deserves to be asked from time to time.

P.S. I heard several speakers say ‘ChatGPT-4.’ If you’re speaking on stage about AI, please recognize there’s a difference between ChatGPT, the chatbot application, and GPT-4, the transformer model. Just saying!