The Metaverse is often associated with virtual reality (VR), but it is not defined by VR. It also involves shifts in media types, user behavior, and the devices we use. Epic Games CEO Tim Sweeney defines the Metaverse as a “real-time, 3D social medium where people can create and engage in shared experiences.”

A close cousin of VR also helps construct this real-time, 3D social medium: augmented reality (AR). AR is about projecting virtual objects directly into the physical world. Good examples of existing AR are Pokémon Go and Snapchat filters.

The AR Revolution

Many consider AR to be the next major revolution in computing and interfaces, one that will eventually replace the smartphone. Instead of pulling a small rectangle out of your pocket to check your messages, you’ll have everything blended directly into your environment through a pair of smart AR glasses. This paradigm shift in how we access digital information will unlock a world of new possibilities.

For example, if you’re walking down the street, your smart AR glasses can tag storefronts with information about the businesses inside. If you’re looking for a place to eat, you can apply a filter and be guided directly to relevant restaurants. Without going inside to read the menu, you can project some of a restaurant’s dishes in front of you to see the portion sizes. There are nearly endless applications for AR, and many companies are joining the effort to build this next revolution.

Why We Need the AR Cloud

But one core element of a useful AR experience has not yet been built: the so-called AR cloud. For the restaurant example to work, a pair of AR glasses needs to understand its surroundings. This understanding includes spatial data, such as distances and surfaces, but also semantic data layered on top of the physical world (this is a fire hydrant; that store is open until 7; the bus you need to take is over there).

Identifying everything in a user’s field of view in real time using computer vision alone is computationally expensive. Instead, devices can rely on a cloud that already contains digital information anchored to real locations. This cloud is also needed for multiplayer AR experiences, in which the same virtual object appears at the same physical location for every user.

For example, if an artist were commissioned to make a digital public art installation for a street corner that everyone with a headset can see, the installation would need to appear at the same location for everyone. A shared cloud would also let everyone build on the same foundation and create value for others, much as people build websites for one another on today’s Internet, thereby generating the network effect needed for mass adoption.
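To make the idea of “content anchored to real locations” more concrete, here is a minimal sketch of a location-based lookup. The `Anchor` and `AnchorStore` names are hypothetical, the store is a simple in-memory list searched by linear scan using great-circle (haversine) distance, and positions are plain latitude/longitude. A real AR cloud would need spatial indexing at planetary scale and far more precise device localization than GPS coordinates, so treat this only as an illustration of the concept: every device that queries near the same corner gets back the same anchored object, with the same coordinates and semantic label.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass
class Anchor:
    """Hypothetical record: virtual content pinned to a real-world location."""
    anchor_id: str
    lat: float
    lon: float
    label: str                    # semantic data, e.g. "fire hydrant"
    asset: Optional[str] = None   # hypothetical reference to a 3D asset


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


@dataclass
class AnchorStore:
    """Hypothetical shared store; every device queries the same anchors."""
    anchors: List[Anchor] = field(default_factory=list)

    def add(self, anchor: Anchor) -> None:
        self.anchors.append(anchor)

    def nearby(self, lat: float, lon: float, radius_m: float) -> List[Anchor]:
        """Return anchors within radius_m of (lat, lon), nearest first (linear scan)."""
        hits = [(haversine_m(lat, lon, a.lat, a.lon), a) for a in self.anchors]
        hits.sort(key=lambda pair: pair[0])
        return [a for dist, a in hits if dist <= radius_m]


# Example data (made up for illustration): a street corner and a cafe ~1 km away.
store = AnchorStore()
store.add(Anchor("hydrant-1", 40.7410, -73.9897, "fire hydrant"))
store.add(Anchor("mural-1", 40.7411, -73.9896, "public art installation", "mural.glb"))
store.add(Anchor("cafe-1", 40.7500, -73.9900, "cafe, open until 7"))

# Two users standing at slightly different spots on the same corner.
user_a = store.nearby(40.7410, -73.9897, radius_m=50)
user_b = store.nearby(40.7412, -73.9895, radius_m=50)
```

Both users receive the same `mural-1` anchor with identical coordinates, which is the property a shared AR cloud needs for multiplayer experiences; the distant cafe falls outside the 50 m radius for both of them.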

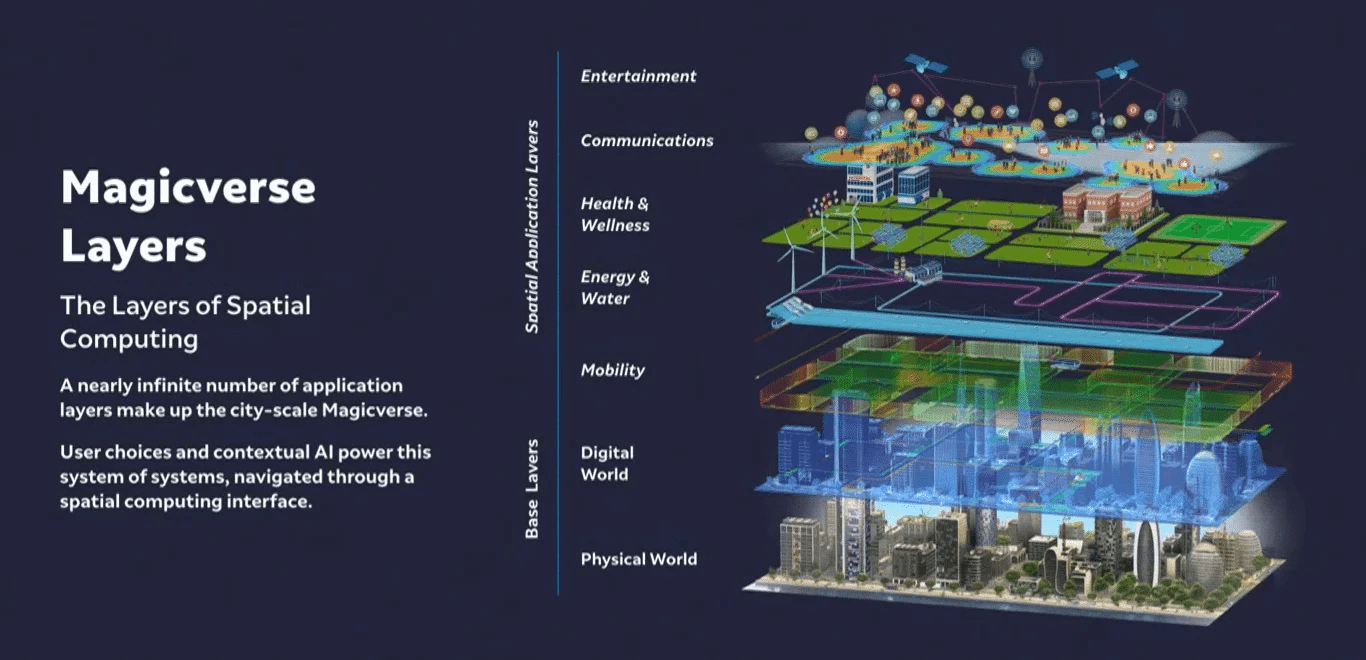

This idea goes by many names: the “Metavearth,” the Internet of Places, the Mirror World, and the spatial Internet. Whatever you call it, the big-picture goal is to eventually replicate the real world, with all its data sources and sinks, in a digital form that devices can interact with, whether those devices are head-mounted displays (HMDs), Internet of Things (IoT) devices, or vehicles. The AR cloud is the next step in our journey toward truly merging physical and digital reality, and a key piece in enabling the next major tech revolution: augmented reality.

Building the AR Cloud

Building the AR cloud is mostly a challenge of scale. While we already have much of the technology and techniques required, they need to be applied en masse to build a worldwide database. Google is a strong contender in the race to build the AR cloud because of its experience with similar projects such as Google Street View, Google Lens, and Live View in Google Maps. Google Maps already has the largest collection of business information geo-anchored to real locations.

Hardware is the other bottleneck. We do not yet have AR headsets powerful enough to stream complex 3D assets from such a cloud in real time, and it is still very difficult for headsets to determine their precise position and orientation relative to the spatial cloud in real time.

The Future of the AR Cloud

The AR cloud should not be owned by a single company. Like the protocol foundations of the Internet or the infrastructure of a city, it is a baseline that everyone else relies on and becomes part of. Whenever the discussion turns to building an “open Metaverse,” an open AR cloud is what comes to my mind. Just as with the current Internet, steps need to be taken to make sure the layers at the bottom of the AR stack remain open, decentralized, and interoperable.

The Open AR Cloud Association (OARC) is a nonprofit working across the industry to ensure an open AR cloud. It proposes standards for how headsets communicate their location and orientation, how real-world properties are encoded, how access management can work on a location basis, and, more generally, how systems for semantics and relationships should be designed. If you’re working in the industry or looking to get ahead in the AR revolution, consider supporting initiatives like the OARC.
