I often debate with colleagues about why big tech companies are giving away so much machine learning software and so many sophisticated artificial intelligence models as open source, putting such powerful tools in everybody’s hands. Most of my colleagues and fellow data professionals tend to overvalue the machine learning models and software packages they can use freely and without restrictions to build their own custom solutions. Some of them tend to think of code as the crown jewels that must be protected at all costs.

That is true for the code of a specific AI-powered application. In machine learning, however, having access to libraries such as TensorFlow or PyTorch doesn’t bring you much closer to achieving what Google or Meta can do.

Libraries are not where the key value of an AI-powered application lies. A machine learning model is just a mathematical formula implemented as software. Everybody knows how to add, subtract, multiply, and divide. Not everybody knows how to add fractions, work with irrational numbers, or invert matrices.

So using the formulas, that is, the machine learning model itself, is not what matters most. What is really valuable is how you use them and which data you apply them to. In other words, your training data is what matters.

Using Training Data

The training data is the raw data used to develop a machine learning model. What is special and important about the training data is that it has been specifically refined and prepared for your business case.

Specifically, it means that internal data from your own systems about your customers, market, products, marketing campaigns, and finances, often combined with external data, has been used, merged, combined, aggregated, melted, mashed up, cleaned, polished, staged, prepared, and engineered, among much else, by many of your team members.

This data is truly unique and provides a lot of insight into your business. It is what makes the machine learning model work and what adds value to your AI-powered application.

Possible Threats to AI Models

Does this mean that an AI-powered application or product cannot be secured? There are some ways malicious actors could potentially harm a company, depending on how its ML assets are released. The most concerning threat would be a competitor copying a new feature, such as an AI-powered phone app or web app, and gaining the advantage I worked to build to differentiate myself in the marketplace.

It is true that a model that has been built and customized can be accessed. Through reverse engineering, someone may be able to figure out the model and how it was built, and to inspect the final outcome of the engineering process. However, doing something useful with it is only a remote possibility. In essence, dissecting machine learning code won’t help you reproduce the results. Knowing the model architecture is useful, but most architectures differ from one another only incrementally and are useful and efficient only within specific use cases.

Protect Your Models with Training Data

In any case, if the model itself is still a concern, it can be encrypted to protect it.

The key component to protect is the training data. It is what makes the model unique and viable for your business case, and if it is exposed, any third party can easily understand or guess the features it contains. It reveals a lot about how your model, and therefore your solution, was engineered.

It is highly recommended that training data be well masked and re-engineered to hide the attributes the model was trained on, and that fake entries be added.
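To make the masking idea more concrete, here is a minimal Python sketch, assuming a small tabular dataset held as a list of dicts. It pseudonymizes an identifier column with a keyed hash (HMAC-SHA256), renames feature columns to opaque names so a third party cannot read which attributes were used, and adds fake (decoy) rows whose values are sampled from the real columns. The helper names `mask_training_data` and `pseudonymize`, the column names, and the key are hypothetical illustrations, not a prescribed method. Whether decoys belong in the data the model is actually trained on, or only in copies that leave your control, is a judgment call; this sketch keeps their positions in a private set so they can be filtered out.

```python
import hashlib
import hmac
import random
from typing import Any


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256), truncated to 16 hex chars."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_training_data(
    rows: list[dict[str, Any]],
    id_column: str,
    key: bytes,
    n_decoys: int = 0,
    seed: int = 0,
) -> tuple[list[dict[str, Any]], dict[str, str], set[int]]:
    """Hypothetical helper: pseudonymize IDs, rename features, and add decoy rows.

    Returns the masked rows, the private column mapping (original -> opaque name),
    and the private set of decoy row positions. Only the masked rows would be shared.
    """
    rng = random.Random(seed)
    feature_columns = sorted(c for c in rows[0] if c != id_column)
    # Opaque names hide which business attributes the model was trained on.
    mapping = {col: f"f{i}" for i, col in enumerate(feature_columns)}

    masked = []
    for row in rows:
        new_row = {"id": pseudonymize(str(row[id_column]), key)}
        for col in feature_columns:
            new_row[mapping[col]] = row[col]
        masked.append(new_row)

    # Decoy rows: each feature value is sampled from the observed values of that column.
    decoy_indices = set()
    for d in range(n_decoys):
        decoy = {"id": pseudonymize(f"decoy-{d}", key)}
        for col in feature_columns:
            decoy[mapping[col]] = rng.choice([r[col] for r in rows])
        decoy_indices.add(len(masked))
        masked.append(decoy)

    # Shuffle so decoys are not simply the last rows, then record where they ended up.
    order = list(range(len(masked)))
    rng.shuffle(order)
    shuffled = [masked[i] for i in order]
    decoy_positions = {pos for pos, i in enumerate(order) if i in decoy_indices}
    return shuffled, mapping, decoy_positions


# Usage with made-up example records.
rows = [
    {"customer_id": 101, "age": 34, "monthly_spend": 120.5},
    {"customer_id": 102, "age": 51, "monthly_spend": 80.0},
]
masked, mapping, decoys = mask_training_data(
    rows, "customer_id", key=b"keep-this-secret", n_decoys=1
)
print(mapping)  # {'age': 'f0', 'monthly_spend': 'f1'}
```

A keyed hash is used instead of a plain hash because anyone who knows a customer ID could recompute a plain SHA-256 digest and re-identify the row; without the key, that is not feasible. The mapping and the decoy positions stay internal, alongside the key.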

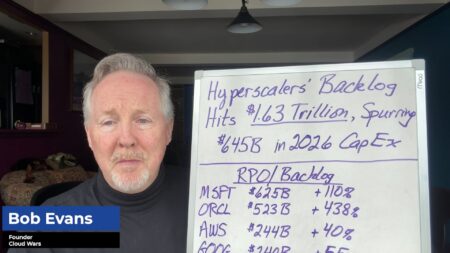
