The Acceleration Economy practitioner analyst team has recently heard from buyers who are confused by the token-based pricing of language models. In this analysis, I give a brief overview of how that pricing works so that you can make more informed decisions at a time when large language models (LLMs) are becoming ubiquitous, and often necessary, elements of products and operations.

Ultimately, base-level models, whether LLMs like GPT-4 or image generators like DALL-E 2, are priced by computational consumption, just like most cloud services. The biggest difference is the unit of measurement. Before diving into prices, there are a few key terms to know:

- Tokens are the basic units of text or code that LLMs use to process and generate language. They can be individual characters, parts of words, whole words, or parts of sentences. Each token is mapped to a number, and the resulting sequence of numbers becomes the actual input to the LLM's neural network. What the tokens look like depends on the tokenization scheme. As a rule of thumb, however, 1,000 tokens is about 750 words in English.

- Tokenization is the act of splitting larger input or output texts into smaller units for LLMs to ‘digest.’ OpenAI and Azure OpenAI use a tokenization scheme called ‘Byte-Pair Encoding’ that merges the most frequently occurring pairs of characters or bytes into a single token.

- A prompt is the text instruction you give to a model.

- A completion is the model's response to a prompt.
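To make the Byte-Pair Encoding idea above more concrete, here is a minimal toy sketch of the merge step. It works on characters only, and the function names (`most_frequent_pair`, `merge_pair`, `toy_bpe`) are hypothetical. It is not the tokenizer OpenAI or Azure OpenAI actually ship; production tokenizers learn their merges from very large corpora.

```python
from collections import Counter


def most_frequent_pair(tokens):
    """Return the most common adjacent pair of tokens, or None if there is none."""
    pairs = Counter(zip(tokens, tokens[1:]))
    return pairs.most_common(1)[0][0] if pairs else None


def merge_pair(tokens, pair):
    """Replace each non-overlapping occurrence of `pair` with a single merged token."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def toy_bpe(text, num_merges):
    """Start from single characters and apply up to `num_merges` merge steps."""
    tokens = list(text)
    for _ in range(num_merges):
        pair = most_frequent_pair(tokens)
        if pair is None:
            break
        tokens = merge_pair(tokens, pair)
    return tokens


if __name__ == "__main__":
    # Each merge step shortens the token sequence without losing any text.
    print(toy_bpe("aaabdaaabac", 1))
```

Fewer tokens for the same text means a smaller bill, which is why the tokenization scheme matters for pricing.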

With any generative AI model, there is always a tradeoff between computational load and performance. Best-in-class models like GPT-4 are extremely powerful but require much more computation than simpler models. This is mostly because larger models run far more calculations for every token they process; differences in tokenization schemes also play a part.

Case Study: Breaking Down Anthropic’s LLM Pricing

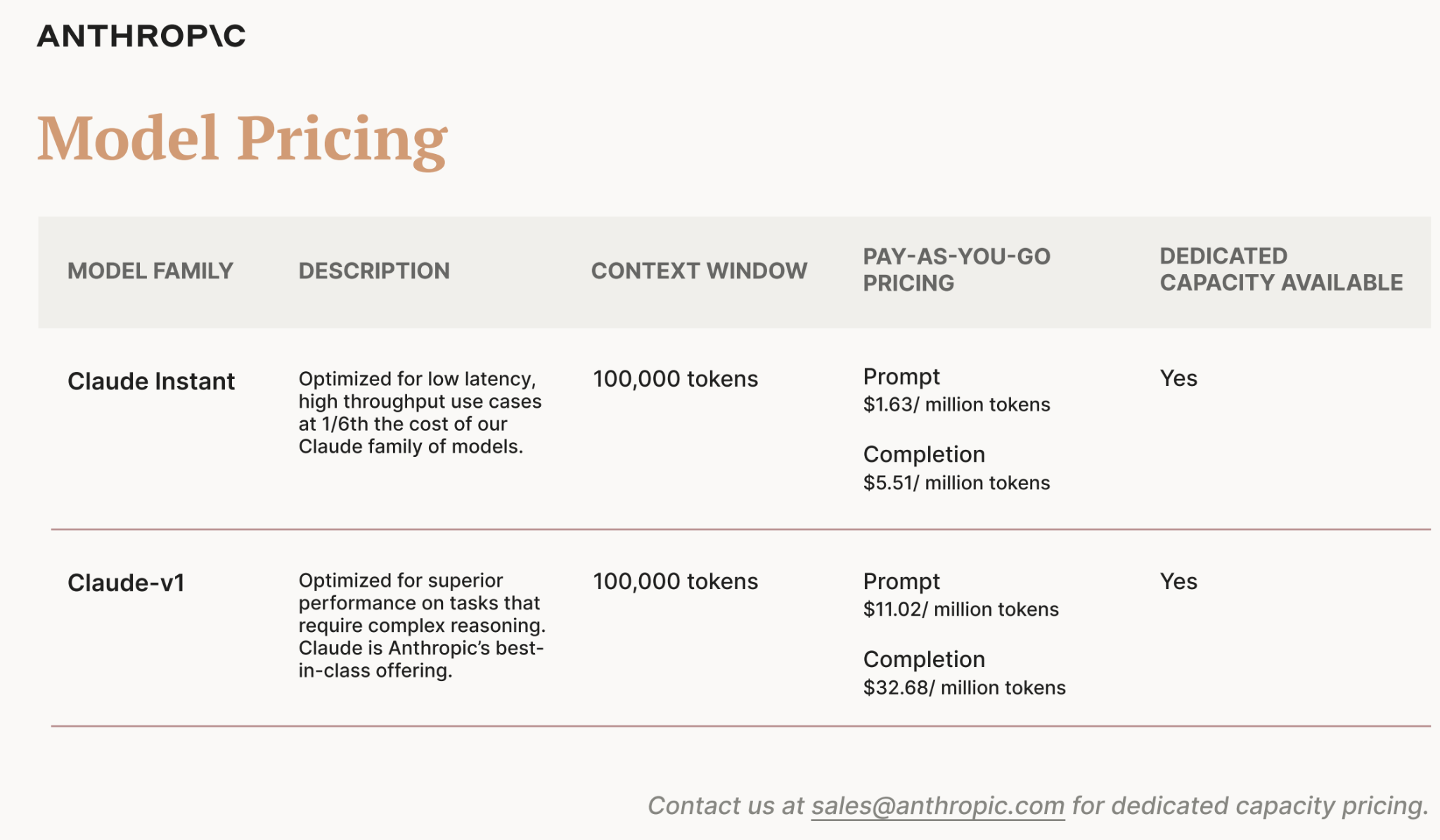

The prices of different models vary greatly. Below is a screenshot from Anthropic, a competitor of OpenAI:

In this example, Anthropic has two different LLMs, Claude Instant and Claude-v1. Their prices differ by a factor of nearly 10, which underscores the point that you need to be very selective about where each model is applied. The best way to see if a model meets your needs is to try it in a test environment first.

In the table, you also see a column for ‘Context Window.’ This indicates how much text the model can take in at once, measured in tokens, and therefore how long your prompt can be. 100,000 tokens equate to about 75,000 words, roughly the length of a full-length novel. ChatGPT and GPT-3.5 have a context window of only a few thousand tokens, a ceiling you will quickly hit if you copy and paste long texts into the prompt box. One version of GPT-4, called gpt-4-32k, supports up to 32,768 tokens. This is very high for a model that also performs so well, a combination that makes GPT-4 one of the most expensive options.
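As a rough illustration of the context-window arithmetic, the sketch below estimates a token count from a word count using the rule of thumb of 1,000 tokens per 750 English words, then checks it against a window size. The function names and the `reserved_for_completion` parameter are hypothetical, and the estimate is only an approximation; a provider's real tokenizer gives the exact count.

```python
def estimate_tokens(text):
    """Estimate tokens from the word count (1,000 tokens per 750 words, rounded up)."""
    words = len(text.split())
    return (words * 1000 + 749) // 750  # integer ceiling of words * 1000 / 750


def fits_context(text, context_window_tokens, reserved_for_completion=0):
    """Check whether the estimated prompt size fits inside the context window.

    `reserved_for_completion` is a hypothetical allowance for the response,
    for models whose window covers the prompt and completion together.
    """
    return estimate_tokens(text) + reserved_for_completion <= context_window_tokens


if __name__ == "__main__":
    novel = "word " * 75_000  # stand-in for a 75,000-word text
    print(estimate_tokens(novel))
    print(fits_context(novel, 100_000))  # a 100,000-token window
    print(fits_context(novel, 32_768))   # the gpt-4-32k window
```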

You will also notice that Anthropic charges per Prompt and per Completion. This means for every interaction with an LLM, you will be charged for the length of the input you give as well as the length of the output. This is because computation must be done to convert your natural language input into a vector format that an LLM can understand, then run the input through the neural network itself to receive an output.

This double charge is very common across LLM providers, and it’s worth noting that the Prompt charge is typically lower than the Completion charge. This is because generating a completion takes more computation than processing the prompt.
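The prompt-plus-completion charge comes down to simple arithmetic, sketched below. The function name and the prices in the usage example are placeholders chosen for illustration, not figures from any provider's price list; substitute the current per-token prices of the model you are evaluating.

```python
def request_cost(prompt_tokens, completion_tokens,
                 prompt_price_per_million, completion_price_per_million):
    """Cost in dollars of one API call that is billed separately for input and output."""
    prompt_cost = prompt_tokens * prompt_price_per_million
    completion_cost = completion_tokens * completion_price_per_million
    return (prompt_cost + completion_cost) / 1_000_000


if __name__ == "__main__":
    # Placeholder prices: $2 per million prompt tokens, $6 per million completion tokens.
    cost = request_cost(prompt_tokens=1_000, completion_tokens=500,
                        prompt_price_per_million=2.0,
                        completion_price_per_million=6.0)
    print(f"${cost:.4f} per request")
```

Multiplying the per-request figure by your expected request volume gives a first estimate of monthly spend.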

Case Study: Microsoft OpenAI Service Pricing Breakdown

With Microsoft’s Azure OpenAI Service, you can use OpenAI’s models, including DALL-E and the GPT-n series, right within Azure. Note: ChatGPT and ChatGPT Plus have very different pricing structures; the numbers in this section are for direct API calls.

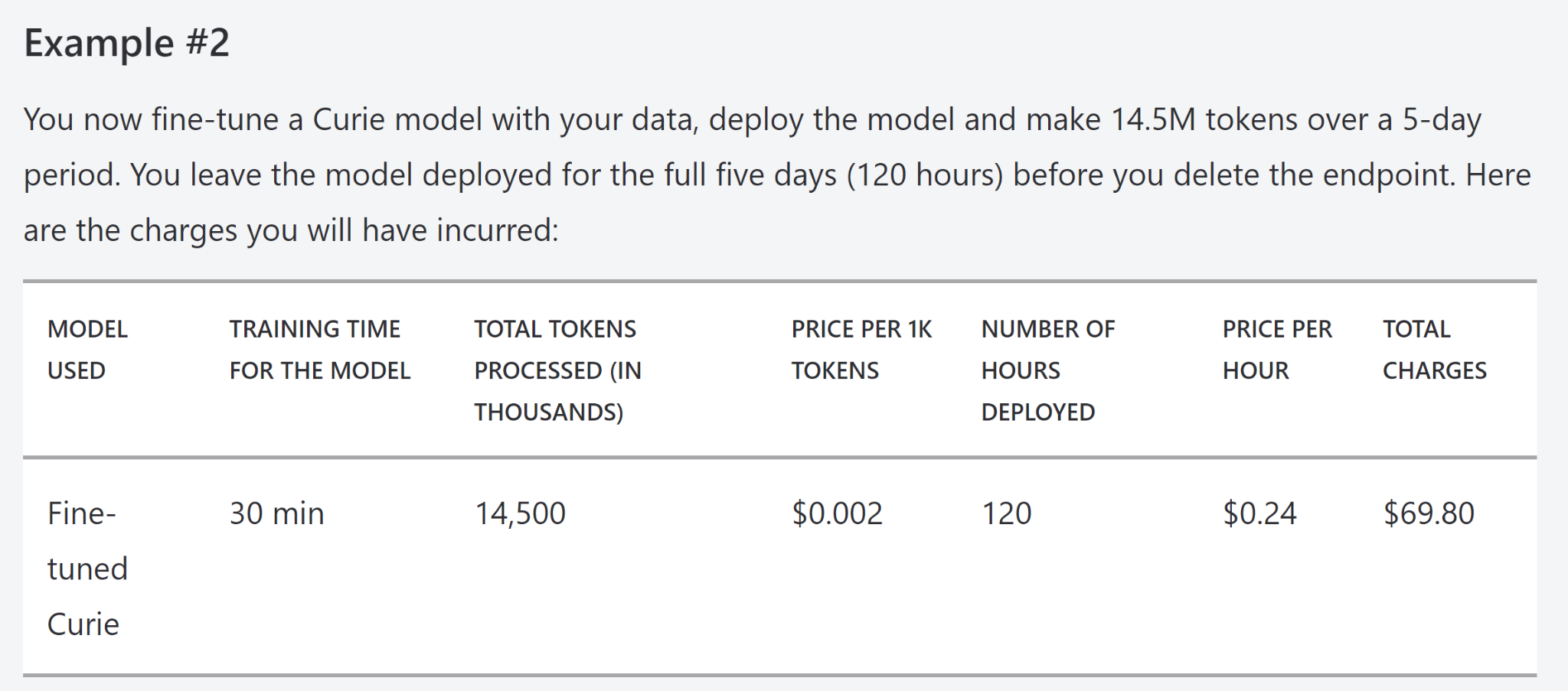

You will also notice a table for standard vs. fine-tuned models. Fine-tuned models are custom-trained models, in which you influence the model’s behavior by training it with your own dataset. Fine-tuned models are priced by the hour of deployment as well as by tokens.
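Because a fine-tuned model is billed on two meters, hosting time and token usage, its cost does not fall to zero when traffic is low. The sketch below shows that arithmetic; the function name and all rates are hypothetical placeholders rather than Azure's actual prices.

```python
def fine_tuned_cost(hours_deployed, hourly_rate, tokens_used, price_per_1k_tokens):
    """Cost in dollars of a fine-tuned deployment: hosting hours plus token usage."""
    hosting = hours_deployed * hourly_rate
    usage = tokens_used / 1000 * price_per_1k_tokens
    return hosting + usage


if __name__ == "__main__":
    # Placeholder rates: $0.50 per hosted hour, $0.002 per 1,000 tokens.
    busy_month = fine_tuned_cost(720, 0.50, 2_000_000, 0.002)
    idle_month = fine_tuned_cost(720, 0.50, 0, 0.002)
    print(busy_month, idle_month)
```

With rates like these, hosting dominates the bill, so it is worth checking whether your expected traffic justifies keeping a fine-tuned deployment running.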

All in all, the pricing structure of Azure OpenAI Service is very similar to that of other Azure services. Before its partnership with OpenAI, Microsoft was already offering its Cognitive Language Services (sentiment analysis, summarization, and more), which are priced in chunks of 1,000 characters, with model training priced by the hour. Other cognitive services like computer vision are priced by ‘transaction,’ which is, in almost all cases, the same as an API call.

Another enterprise-friendly LLM provider to check out is Cohere, which recently announced a partnership with Oracle. For its text generation tool, Cohere charges $15 for 1 million tokens. For reference, the model behind ChatGPT, gpt-35-turbo, costs around $2 for 1 million tokens. However, Cohere has more offerings around enterprise security, flexibility, and privacy that might justify this cost.
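To see what the gap between $15 and $2 per million tokens means at scale, the sketch below applies both prices to the same monthly volume. The prices are the ones quoted above; the dictionary keys are labels for this example only, and the monthly token volume is a made-up figure.

```python
# Prices per 1 million tokens, as quoted in this article.
PRICE_PER_MILLION = {
    "cohere-generate": 15.0,  # label for this example, not an official model name
    "gpt-35-turbo": 2.0,
}


def monthly_cost(tokens_per_month, price_per_million):
    """Cost in dollars of processing `tokens_per_month` tokens at a flat per-token price."""
    return tokens_per_month / 1_000_000 * price_per_million


if __name__ == "__main__":
    volume = 50_000_000  # hypothetical monthly token volume
    for name, price in PRICE_PER_MILLION.items():
        print(f"{name}: ${monthly_cost(volume, price):,.2f} per month")
```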

Cost Minimization

You can do a number of things to minimize the cost you incur from LLM providers:

- Use cost management tools like Microsoft Cost Management.

- Choose the right model. Unfortunately, this requires some trial and error, which is worth doing before putting a model into production and incurring high costs for performance that your use case doesn’t need. Again, costs vary greatly. gpt-35-turbo costs roughly one-tenth as much as GPT-4 and, in most cases, is just as good.

- Reduce the prompt length. Providers will charge based on the length of your prompt and the output.

- Limit the maximum response length, for example by asking for a short answer in the prompt itself.

- Consolidate prompts by combining information with questions and requests, in order to reduce the prompt length and number of outputs required.

- Consider using prompt management software or token cost tracking software.
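As a sketch of what token cost tracking involves, the class below accumulates prompt and completion tokens per model and converts them into dollars. The class name, model labels, and prices are all hypothetical; a real tool would read token counts from the usage data your provider returns with each response.

```python
from collections import defaultdict


class TokenCostTracker:
    """Accumulate token usage per model and convert it into dollar cost."""

    def __init__(self, prices_per_million):
        # {model: (prompt_price, completion_price)}, in dollars per 1 million tokens
        self.prices = dict(prices_per_million)
        self.usage = defaultdict(lambda: [0, 0])  # model -> [prompt, completion]

    def record(self, model, prompt_tokens, completion_tokens):
        """Add the token counts of one request to the running totals."""
        if model not in self.prices:
            raise KeyError(f"no price configured for model {model!r}")
        self.usage[model][0] += prompt_tokens
        self.usage[model][1] += completion_tokens

    def cost(self, model):
        """Dollar cost so far for a single model."""
        prompt_tokens, completion_tokens = self.usage.get(model, (0, 0))
        prompt_price, completion_price = self.prices[model]
        return (prompt_tokens * prompt_price
                + completion_tokens * completion_price) / 1_000_000

    def total_cost(self):
        """Dollar cost so far across all models with recorded usage."""
        return sum(self.cost(model) for model in self.usage)


if __name__ == "__main__":
    # Placeholder model labels and prices, for illustration only.
    tracker = TokenCostTracker({"small-model": (2.0, 2.0), "large-model": (30.0, 60.0)})
    tracker.record("small-model", prompt_tokens=1_000, completion_tokens=1_000)
    tracker.record("large-model", prompt_tokens=1_000, completion_tokens=1_000)
    print(f"${tracker.total_cost():.4f}")
```

Breaking costs out by model makes it easier to spot requests that could move to a cheaper model.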

Final Thoughts

This analysis should make LLM pricing clearer. The field of generative AI is still very new, and pricing is bound to change in the future. The main point to emphasize is the need to get hands-on with different models and different providers before jumping into production, not only to select the right model and minimize cost but also to understand how your business will be charged for your unique use case.

Different providers also have different strengths. OpenAI’s partnership with Microsoft brings it right into Azure. Anthropic places more value on AI safety and ethics. Cohere’s products are designed for the enterprise. It also seems like a new AI startup is coming out every day. All in all, it’s important for companies considering building AI products or embedding AI into their offerings to look at multiple providers, including startups, both to manage cost and to make sure the provider’s values align with their own.